Return of the Lisp Machine: How the Antitrust Remedy of 2025 Forced Google into Hardware Determinism

Date: December 8, 2025 Location: Mountain House, CA Theme: Legal History & Computer Architecture

Abstract

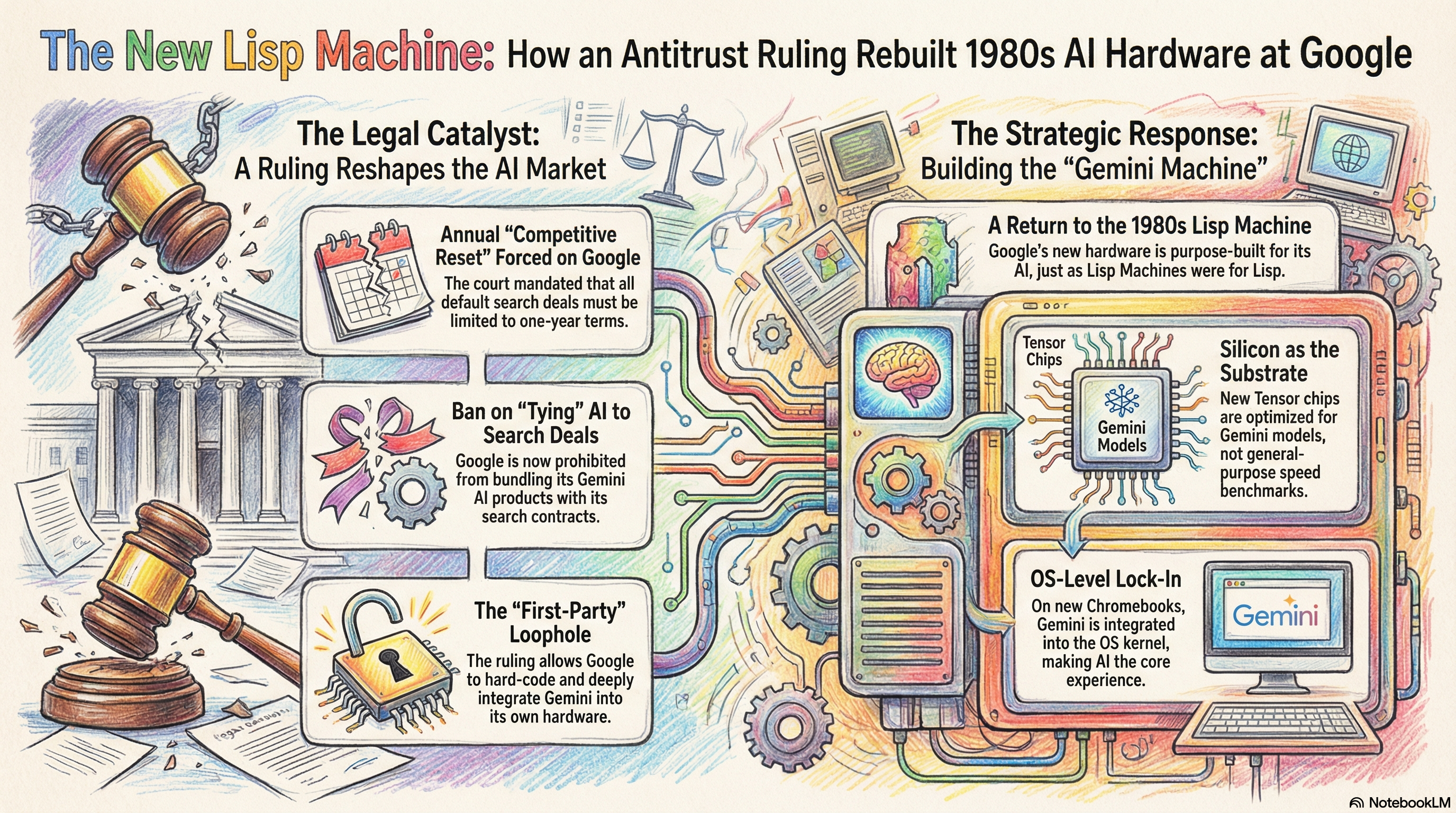

The December 5, 2025 remedial order by Judge Amit Mehta in United States v. Google LLC has fundamentally altered the economic incentives of the internet's dominant search provider. By mandating annual renegotiations for default search placement and prohibiting the bundling of AI products with search contracts, the court has inadvertently resurrected a dormant philosophy in computer science: the specialized "language machine." This paper argues that Google’s strategic pivot—from "renting" users on general-purpose devices (iPhones) to building vertically integrated "Gemini Machines" (Pixel, Chromebook Plus, Project Aura)—mirrors the rise of Lisp Machines in the 1980s. We are witnessing a shift from the Von Neumann era of general-purpose computing to a new era of Inference-Native architectures, where the hardware is physically optimized to run the model as the operating system.

I. The Legal Catalyst: The "One-Year" Shock

On Friday, December 5, 2025, the U.S. District Court for the District of Columbia issued a remedial order that dismantled the "security of tenure" Google enjoyed for two decades [1]1. The ruling mandates that all default search agreements (e.g., with Apple and Samsung) must now be limited to one year in duration. 2 Furthermore, it explicitly bans the "tying" of generative AI products (Gemini) to these lucrative search revenue-share deals [2]3.

This legal shock creates a "volatility trap" for Google. The company can no longer use its search monopoly to guarantee the distribution of its AI models. In the words of Judge Mehta, the goal is to force an annual "competitive reset" [1]4. For Google, the logical counter-move is to retreat to a "Safe Harbor"—a hardware environment where they write the rules. The ruling rejected a ban on "self-preferencing" for first-party devices, legally sanctioning Google’s ability to hard-code Gemini into the silicon of its own products [3].

II. Historical Parallel: The Lisp Machine (1979–1988)

To understand Google's 2025 hardware strategy, one must look to the MIT AI Lab in the late 1970s. At the time, the "Lisp" programming language was the standard for AI research, but it was too resource-intensive for commodity hardware (like the DEC PDP-10). 5 The solution was the Lisp Machine (commercialized by Symbolics and Lisp Machines Inc.): a computer where the hardware architecture was designed specifically to execute Lisp instructions [4]6.

Key Characteristics of the Lisp Machine:

- Tag-Bit Architecture: The hardware natively understood Lisp data types (lists, atoms) at the instruction level.

- Unified Memory: The OS and the user applications shared a single address space; "garbage collection" was a hardware-assisted process. 7

- The "Environment" is the App: There was no distinction between the operating system and the development environment (REPL).

The Lisp Machine eventually failed because general-purpose CPUs (Intel x86) became fast enough to run Lisp in software ("Moore's Law beat the specialist") [5]. However, in late 2025, we are reaching the physical limits of general-purpose computing for Transformers.

III. The Rise of the "Gemini Machine"

Just as Symbolics built hardware for Lisp, Google is now building hardware for Gemini. The 2025 court ruling has accelerated the deployment of "Inference-Native" devices, specifically the Pixel 10 (Tensor G5), Chromebook Plus, and Project Aura (Android XR) [6].

A. The Tensor G5: The New "Tag Bit"

The Google Tensor G5 chip, fabricated on a 3nm process, is not designed to win Geekbench scores against Apple’s A-series chips. It is designed for matrix multiplication density. 8 The chip features a TPU (Tensor Processing Unit) that is 60% more powerful than its predecessor, specifically tuned to run "Gemini Nano" locally [7]9.

Parallel: Just as the Lisp Machine had hardware support for "car" and "cdr" operations, the Tensor G5 has hardware pathways optimized for the specific sparsity and quantization of the Gemini model.

B. The OS as "Context Window"

The most profound architectural shift is in ChromeOS and Android XR. On a standard computer, "memory" is a place to store files. On a Lisp Machine—and now a Gemini Machine—memory is Context.

The Feature:

The new Chromebook Plus integrates Gemini into the OS kernel. 10 The "Context Window" (up to 1 million tokens) effectively acts as the machine's RAM [8]11. The AI "sees" what you are doing across all tabs and apps simultaneously. 12

The Lock-in:

By prohibiting the bundling of Gemini on third-party devices, the court has forced Google to make this deep integration exclusive to its own hardware. You cannot "download" this OS-level context awareness onto a Windows PC; it requires the proprietary handshake between the Tensor chip and the ChromeOS kernel.

C. Project Aura: The Post-App Interface

The court ruling essentially regulates "Apps" (Search, Chrome). Google's answer is Project Aura (Android XR glasses), which eliminates the concept of apps entirely [9].

Agent-Based UI:

On these glasses, the user does not open a "Search" app (which would be subject to the court's choice screen). The user simply looks at an object and asks a question. The "Agent" (Gemini) answers.

Regulatory Bypass:

Because there is no "default search engine" setting—only a singular AI voice—the device sidesteps the annual auction mandate. It is a closed loop, similar to the Symbolics environment of 1982.

IV. Conclusion: The Divergence of 2026

The 2025 ruling was intended to open the market, but it may ironically bifurcate it.

The "Open" Market:

iPhones and Samsung devices will become the "General Purpose" computers of the era—neutral platforms where users manually choose between ChatGPT, Claude, and Gemini every 12 months.

The "Closed" Market:

Google's own devices will become "Gemini Machines"—specialized, vertically integrated appliances where the AI is not a choice, but the substrate of the computing experience.

History suggests that specialized hardware (Lisp Machines) eventually loses to general-purpose scale. However, unlike Symbolics, Google has the capital to sustain this divergence until the "Gemini Machine" becomes the superior form factor.

Footnotes

References

- Mehta, A. (2025). United States v. Google LLC, Remedial Order (Dec. 5, 2025). U.S. District Court for the District of Columbia.

- Times of India. (2025, Dec 6). "Court orders Google to limit default search and AI app deals to one year."

- Social Samosa. (2025, Dec 8). "Court orders new limits on Google’s search and AI deals."

- Greenblatt, R. et al. (1984). "The LISP Machine." Interactive Programming Environments. McGraw-Hill.

- Gabriel, R. P. (1991). "Lisp: Good News, Bad News, How to Win Big." AI Expert.

- Google Blog. (2025, Aug 20). "Pixel 10 introduces new chip, Tensor G5." The Keyword.

- Wccftech. (2025, Oct 12). "The Architecture Of Google's Tensor G5 Chip."

- Data Studios. (2025, Nov 1). "Google Gemini: Context Window, Token Limits, and Memory in 2025."

- UploadVR. (2025, Dec 8). "First Image & Clip Of Xreal's Project Aura Android XR Device Revealed."

-

This refers to footnote 1, related to United States v. Google LLC. ↩

-

This refers to footnote 2, related to the one-year duration for default search agreements. ↩

-

This refers to footnote 3, related to the ban on tying generative AI products. ↩

-

This refers to footnote 4, related to Judge Mehta's "competitive reset" goal. ↩

-

This refers to footnote 5, related to Lisp being too resource-intensive for commodity hardware. ↩

-

This refers to footnote 6, related to the Lisp Machine commercialization. ↩

-

This refers to footnote 7, related to hardware-assisted garbage collection. ↩

-

This refers to footnote 8, related to the Tensor G5's matrix multiplication density. ↩

-

This refers to footnote 9, related to the TPU being tuned for Gemini Nano. ↩

-

This refers to footnote 10, related to Chromebook Plus integrating Gemini into the OS kernel. ↩

-

This refers to footnote 11, related to the Context Window acting as RAM. ↩

-

This refers to footnote 12, related to AI seeing across all tabs and apps. ↩