An integrated exploration of intelligence - that sticks :-)

Around and About

The Social Synapse: Distributed Cognition, Symbol Grounding, and the Gossip Protocol

1. Introduction: The Crisis of the Isolated Mind

The defining characteristic of human intelligence is not the raw processing power of the individual neural substrate, but its integration into a vast, decentralized semantic network. For decades, cognitive science and artificial intelligence have wrestled with the Symbol Grounding Problem: the fundamental question of how a semantic interpretation of a formal symbol system can be made intrinsic to the system rather than parasitic on meanings residing in the heads of external observers.1 The prevailing, yet often contested, view in internalist philosophy is that meaning is a computation performed over internal representations—that the mind is a container of symbols that refer to the world by virtue of their correspondence to sensory data.

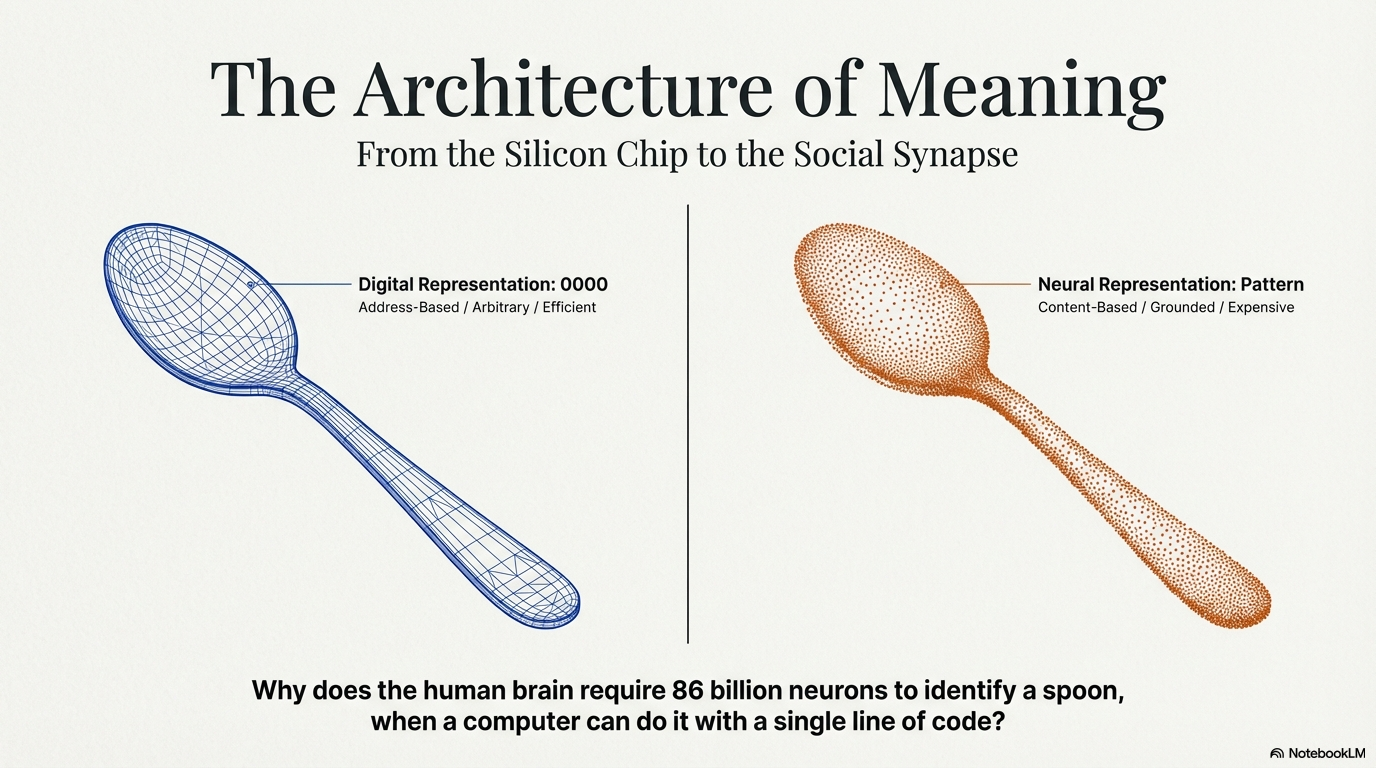

However, a rigorous analysis of the "spoon" dilemma—the challenge of assigning a stable, meaningful symbol to the physical pattern of a concave object—reveals that an isolated brain is structurally incapable of developing meaning. In the absence of a social group, there is no functional reason to assign a discrete symbol to a physical pattern. The "spoon" as a concept is not inherent in the physics of the metal or wood; it is a coordinate in a social consensus. This report argues that the grounding of symbols is an inherently social process, necessitating that brains be networked using a communicative protocol functionally identical to Gossip.

By synthesizing evidence from the philosophy of language (Wittgenstein, Putnam), evolutionary anthropology (Dunbar), cognitive robotics (Steels), and distributed computing (Gossip Protocols), we demonstrate that "meaning" is not a property of the individual mind but an emergent property of the network. The isolated brain is a processor without a protocol; it can perceive, but it cannot mean.

1.1 The Hollow Symbol: The Merry-Go-Round of Syntax

At the heart of the inquiry lies the distinction between a physical pattern and a symbol. To an isolated brain, the retinal projection of a spoon is a collection of edges, curves, luminance values, and metallic textures. It is a sensory input, a "physical pattern" as described in the foundational query. However, the meaning of the spoon—the assignment of the symbol "SPOON" to that specific cluster of sensory data—remains elusive in isolation.

Stevan Harnad, who formalized the Symbol Grounding Problem, articulated this by distinguishing between "iconic representations" (analogs of sensory projections) and "symbolic representations".1 While an isolated brain might form an iconic representation of the curved metal object, the leap to a symbol requires a reason to detach the representation from the immediate sensory experience and manipulate it as a discrete conceptual unit. In a purely symbolic system, symbols are defined only by other symbols. "Spoon" is defined as "utensil," "utensil" as "tool," and "tool" as "implement." This circularity creates a "merry-go-round" of meaningless tokens.1 The isolated brain can manipulate the tokens based on their shape (syntax), but it cannot break the circle to touch the reality of the object (semantics).

Without a group, the isolated brain is like a computer attempting to learn Chinese from a dictionary written entirely in Chinese.2 It can learn the rules of symbol manipulation—that symbol X often follows symbol Y—but it can never know that symbol X refers to the physical spoon. The user's premise—that a single isolated brain cannot develop meaning—is robustly supported by this theoretical framework. Without a functional reason to stabilize a specific sound or mark (the symbol) against a specific physical pattern (the spoon), the association remains arbitrary, transient, and ultimately meaningless.

1.2 The Argument from Utility: The Economy of Cognition

The query posits that "there is no reason for assigning a symbol to physical pattern of spoon" in isolation. This insight aligns with evolutionary perspectives on language and cognitive economics. The cost of maintaining a symbolic system is non-trivial. It requires neural real estate for the lexicon, energy for phonation or inscription, and cognitive load for processing syntax. In a single-agent world, this cost is unjustifiable.

An isolated agent interacts with the world through affordances—the action possibilities latent in the environment.3 The agent sees the spoon and perceives its "graspability" or "scoopability." It does not need the symbol "Spoon" to use the object; the sensorimotor loop is sufficient. The symbol is a tool for displacement—the ability to refer to an object that is not present. Displacement is a communicative function. I only need the symbol "Spoon" if I need you to fetch the spoon from the other room, or if I need to warn you that the spoon is hot. In isolation, the functional driver for symbol creation—coordination—is absent.

Therefore, the "reason" for the symbol is not found in the physics of the object, but in the dynamics of the group. The symbol is a packet of compressed information optimized for transmission across the bandwidth-constrained channel of social interaction.

---

2. The Architecture of the Isolated Mind vs. The Networked Mind

To understand why the isolated brain fails, we must contrast its architecture with that of the networked mind. The isolated brain operates on a solipsistic loop of perception and action, whereas the networked mind operates on a protocol of consensus and error correction.

2.1 Internalist vs. Externalist Semantics

Classical cognitive science often assumed an "internalist" view: that meaning is a state of the brain, a configuration of neurons that represents the world. However, this view collapses under scrutiny when we consider how reference is actually fixed.

| Feature | Isolated Brain (Internalist) | Networked Brain (Externalist) |
|---|---|---|
| Input Source | Direct Sensory Transduction (Retina) | Sensory + Social Signal (Gossip) |
| Verification | Internal Consistency (Memory) | Public Criteria (Social Feedback) |
| Symbol Stability | Low (Subject to drift/memory error) | High (Stabilized by Protocol) |
| Reference | Private Association (Iconic) | Shared Convention (Symbolic) |
| Function | Immediate Action (Eating) | Coordination/Displacement |

The isolated brain relies on "Physical Symbol Grounding" (PSG)—the causal link between the sensor and the object.5 While PSG is necessary, it is insufficient for language. A robot can have PSG (it stops when its bumper hits a wall), but it does not "mean" wall in the linguistic sense unless it can communicate that concept to another agent and have that communication understood and validated.

2.2 The Chinese Room and the Failure of Isolation

John Searle's famous Chinese Room Argument 1 serves as a powerful allegory for the isolated brain. In the thought experiment, a person inside a room who speaks no Chinese manipulates Chinese characters according to a rulebook (syntax) to produce responses to input. To an outside observer, the person appears to understand Chinese. However, the person has no understanding of what the symbols mean.

The isolated brain is the person in the Chinese Room. It receives inputs (patterns of light from the spoon) and produces outputs (motor commands to lift it), but it lacks the "intentionality" or "intrinsic meaning" that connects the symbol to the world. The "rulebook" in the isolated brain is a private algorithm. In the networked brain, the rulebook is the shared protocol of the community. The understanding resides not in the individual node, but in the system as a whole.

2.3 The Problem of Arbitrariness

The relationship between the signifier (the sound "spoon") and the signified (the concept of the spoon) is arbitrary. There is no physical reason why the pattern of a spoon should be called "spoon" and not "glub." In an isolated brain, this arbitrariness is fatal to stability. Without a community to enforce the arbitrary choice, the brain is free to drift. Today "glub" means spoon; tomorrow it means fork. Enforcing a private convention against oneself carries a cost, and in isolation there is no utility to justify paying it.

In a group, the arbitrariness is resolved by convention. The group agrees (through implicit negotiation or "gossip") that "spoon" is the label. Once established, this convention acts as a fixed reference point that resists individual memory drift. The "reason" for the symbol is thus the necessity of a standardized interface for social interaction.

3. Philosophical Constraints: Why Private Meaning is Impossible

The assertion that a single isolated brain cannot develop meaning is most rigorously defended in the philosophy of Ludwig Wittgenstein and Hilary Putnam. Their work demonstrates that meaning is not a private mental event but a public, social institution.

3.1 Wittgenstein’s Private Language Argument

Ludwig Wittgenstein, in his Philosophical Investigations, dismantled the notion that a language could be intelligible to only one person.6 His Private Language Argument (PLA) is the philosophical bedrock of the user's thesis.

Wittgenstein asks us to imagine a man who decides to keep a diary of a recurring private sensation. He associates the sensation with the sign "S." Every time the sensation occurs, he marks "S" in his calendar. The critical question is: How does he know he is using "S" correctly?

- The Lack of Criteria: In a public language, if I call a "spoon" a "fork," you correct me. I have an independent criterion of correctness (the community). In a private language, I have only my memory.

- The Memory Trap: If I misremember the sensation, I might mark "S" for a different feeling. But since I am the only judge, whatever seems right to me is right. As Wittgenstein famously concluded, "that only means that here we can't talk about 'right'".7

Without the distinction between "being right" and "seeming right," meaning collapses. The isolated brain attempting to name the spoon "S" has no way to distinguish between the spoon, the gleam of light on the spoon, or the feeling of hunger. The symbol "S" becomes a floating variable with no fixed value. The group, therefore, is not just helpful for meaning; it is constitutive of it.

3.2 The Community View and Rule-Following

Wittgenstein's concept of Rule-Following further reinforces the necessity of the network.6 To follow a rule (e.g., "Use the word 'spoon' for concave eating utensils"), there must be a practice. A rule is not a mental state; it is a custom.

- The Isolated Rule: An isolated individual cannot follow a rule because there is no authority to enforce the rule. A rule that can be bent at will is not a rule.

- The Social Ledger: The community acts as the distributed ledger of semantic rules. When the user says "Brains in a group are networked," they are describing the mechanism of rule enforcement. The network protocol (Gossip) checks individual outputs against the consensus rulebook.

3.3 Putnam’s Externalism: Meanings Just Ain’t in the Head

Hilary Putnam extended this analysis with his Twin Earth thought experiment, establishing the doctrine of Semantic Externalism.9

- The Scenario: Imagine a planet (Twin Earth) identical to Earth, except that the liquid called "water" is not H2O but a complex chemical XYZ.

- The Twins: Oscar (on Earth) and Twin Oscar (on Twin Earth) are physically identical molecule-for-molecule. Their isolated brain states are indistinguishable.

- The Divergence: When Oscar says "water," he refers to H2O. When Twin Oscar says "water," he refers to XYZ.

- The Conclusion: Since their internal states are identical but their meanings differ, meaning is not in the head. Meaning is determined by the external environment and the sociolinguistic community.

This directly validates the user's argument. The "meaning" of the spoon is not a neural pattern in the isolated brain. It is a relation between the brain, the object, and the community. The isolated brain has the syntax of the symbol, but the semantics are outsourced to the network.

3.4 Family Resemblance and the Spoon

The "spoon" example is particularly apt because the category "spoon" is not defined by a rigid set of necessary and sufficient conditions. As Wittgenstein noted with the example of "games," categories are defined by Family Resemblance—a complicated network of overlapping similarities.12

- Some spoons are metal, some wood.

- Some are for soup, some for shoes, some for measuring.

- There is no "essence" of spoon-ness.

An isolated brain, seeking a "physical pattern" to ground the symbol, would fail. It might fixate on "metalness" and exclude wooden spoons, or fixate on "concavity" and include shovels. The boundary of the category "spoon" is drawn by social usage—by the "language games" the group plays with the object. The meaning is not in the spoon; it is in the use of the spoon by the group.

3.5 Table: Key Philosophical Arguments Against Private Meaning

| Argument | Proponent | Core Premise | Implication for Isolated Brain |
|---|---|---|---|
| Private Language Argument | Wittgenstein | Correctness requires external criteria. | Cannot verify if "Spoon" is being used consistently; symbol is unstable. |
| Rule-Following Paradox | Wittgenstein | Rules are social customs, not mental states. | Cannot follow the rule "Call this a spoon" without a community to enforce it. |
| Semantic Externalism | Putnam | "Meanings just ain't in the head." | Brain state is insufficient for reference; meaning depends on environment/society. |
| Family Resemblance | Wittgenstein | Categories are fuzzy networks of use. | Cannot define "Spoon" by physical pattern alone; requires social context of usage. |
| Beetle in the Box | Wittgenstein | Private sensations are irrelevant to public meaning. | The internal experience of the spoon is irrelevant; only the public symbol matters. |

---

4. The Evolutionary "Why": From Grooming to Gossip

If the philosophical constraints make isolated meaning impossible, what is the biological mechanism that enables networked meaning? The user explicitly links the networked brain to a "protocol such as Gossip." This aligns with the Social Brain Hypothesis and the work of evolutionary anthropologist Robin Dunbar.

4.1 The Limits of Physical Networking: Grooming

Primates maintain social cohesion through social grooming (allogrooming). Grooming is a tactile networking protocol: it releases endorphins (endogenous opioids), reduces heart rate, and builds trust between individuals.15 It allows primates to form alliances, which are crucial for survival.

- The Bandwidth Problem: Grooming is a one-to-one protocol. An individual can only groom one partner at a time.

- The Time Budget Constraint: Primates can afford to spend only about 20% of their day grooming. This imposes a hard limit on the size of the social network they can maintain.

- The Result: Primate group sizes are capped at approximately 50-80 individuals.

4.2 The Pressure for Scale: Dunbar's Number

As human ancestors evolved, predation pressures and the need for cooperative foraging drove the need for larger groups (roughly 150 individuals—Dunbar's Number).15

- The Crisis: To maintain a group of 150 using physical grooming, humans would need to spend over 40% of their day grooming, leaving no time for eating or sleeping.

- The Solution: A new, more efficient networking protocol was required. This protocol is Language, and specifically Gossip.

4.3 Gossip as the "Killer App" of Language

Dunbar argues that language evolved as a form of "vocal grooming".17

- Multicast Capability: Unlike physical grooming (1-to-1), vocal grooming (Gossip) is 1-to-many. One speaker can "groom" three or four listeners simultaneously.

- Hands-Free Operation: Gossip can be performed while foraging, traveling, or working (e.g., carving a spoon).

- Reputation Management: Gossip allows the exchange of social information about absent third parties. This is the crucial leap. To gossip about someone who is not present, you need a Symbol (a name). To gossip about what they did ("He hit me with a spoon"), you need symbols for objects and actions.

The "reason" for assigning a symbol to the physical pattern of a spoon, therefore, is to enable Gossip about the spoon. The symbol allows the spoon to enter the social calculus even when it is locked in a drawer. "Gossip" is the evolutionary driver that makes the cost of symbolization worthwhile.
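The multicast advantage can be checked with back-of-the-envelope arithmetic. The sketch below is illustrative only: it assumes a fixed daily bonding budget, assumes that one session of vocal grooming bonds each listener as effectively as one session of physical grooming, and takes the lower figures quoted above (a one-to-one group of about 50, and three listeners per speaker). The function name `supported_group_size` is hypothetical.

```python
def supported_group_size(one_to_one_group: int, listeners_per_speaker: int) -> int:
    """Group size sustainable on the same time budget when each
    bonding session reaches several listeners instead of one."""
    return one_to_one_group * listeners_per_speaker


# One-to-one grooming sustains roughly 50 individuals; reaching three
# listeners at once triples that on the same time budget.
print(supported_group_size(50, 3))  # 150
```

Under these simplifying assumptions the result lands on the group size cited in section 4.2, which is the intuition behind treating gossip as a higher-throughput replacement for grooming.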

4.4 The Protocol of Norm Enforcement

Gossip serves a critical function in Selfishness Deterrence and Norm Enforcement.19 In a large group, "free riders" (individuals who take benefits without contributing) are a threat. Gossip is the distributed policing mechanism.

- Detection: "Did you see Agent X take the extra food?"

- Dissemination: The news spreads through the network via the gossip protocol.

- Exclusion: The group ostracizes Agent X.

This same mechanism applies to Semantic Norms. If Agent Y calls a spoon a "shovel," the group gossips: "Agent Y is unreliable; they don't know the words." The pressure to avoid being the subject of negative gossip drives individuals to align their symbol usage with the group consensus. This alignment is symbol grounding.

4.5 Table: Comparative Analysis of Grooming vs. Gossip Protocols

| Feature | Physical Grooming (Primate Protocol) | Gossip (Human Protocol) |
|---|---|---|
| Modality | Tactile (Touch) | Vocal (Speech/Symbol) |
| Connectivity | One-to-One (Unicast) | One-to-Many (Multicast) |
| Bandwidth | Low (Emotional state only) | High (Social info, reputation, symbols) |
| Range | Proximity (Touch distance) | Distant (Auditory range / Displacement) |
| Max Group Size | ~50-80 | ~150 (Dunbar's Number) |
| Symbol Use | None required | Essential (Names, Objects) |
| Primary Function | Hygiene / Bonding | Information Exchange / Norm Enforcement |

---

5. The Mechanism of Grounding: Agents, Robots, and Talking Heads

The philosophical and evolutionary arguments are compelling, but can we observe this process in action? The field of cognitive robotics, particularly the work of Luc Steels, provides empirical verification of the user's thesis through the Talking Heads Experiment.

5.1 The Talking Heads Experiment

Luc Steels designed an experiment to test how autonomous agents could evolve a shared language without a central controller.21

- The Agents: Robotic pan-tilt cameras ("Talking Heads") connected to a computer cluster. They could perceive "physical patterns" (colored geometric shapes on a whiteboard).

- The "Brain": Each agent had its own private internal memory (an associative memory linking words to categories) but no pre-programmed dictionary.

- The Environment: A set of physical objects (triangles, squares, circles) in a shared visual field.

5.2 The Language Game Protocol

The agents were programmed to execute a Language Game, specifically a "Guessing Game".23

- Context Setting: Two agents (Speaker and Hearer) focus on a shared context (the whiteboard).

- Discrimination: The Speaker selects a target object (e.g., the red triangle) and distinguishes it from the background using internal feature detectors (Physical Symbol Grounding).

- Vocalization:

  - If the Speaker has a word for this category (e.g., "Mokep"), it utters it.

  - If not, it invents a new random word.

- Guessing: The Hearer hears "Mokep."

  - If it knows the word, it points to the object it thinks "Mokep" refers to.

  - If it doesn't know, it guesses or signals confusion.

- Feedback (The Gossip Loop):

  - Success: If the Hearer points to the correct object, both agents increase the weight of the association "Mokep" <-> "Red Triangle." The symbol is reinforced.

  - Failure: If the Hearer points to the wrong object, the Speaker corrects it by pointing to the right object. The Hearer then creates or adjusts its association.
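The loop above can be sketched as a small simulation. This is a deliberately simplified naming game, not a reconstruction of Steels' actual software: perception is skipped (agents are handed the target object directly), invented words are unique strings, and a successful round makes both agents drop every competing word. The function name `play_naming_game` and all parameter values are assumptions chosen for illustration.

```python
import random


def play_naming_game(n_agents=5, objects=("red triangle", "blue square"),
                     rounds=2000, seed=0):
    """Simulate a simplified naming game and return (lexicons, outcomes)."""
    rng = random.Random(seed)
    # lexicons[a][obj] is the set of candidate words agent a holds for obj.
    lexicons = [{obj: set() for obj in objects} for _ in range(n_agents)]
    outcomes = []   # True for each round in which the hearer understood
    next_word = 0   # counter used to invent fresh, unique words

    for _ in range(rounds):
        speaker, hearer = rng.sample(range(n_agents), 2)
        target = rng.choice(objects)

        # Vocalization: reuse a known word or invent a new one.
        if not lexicons[speaker][target]:
            lexicons[speaker][target].add(f"w{next_word}")
            next_word += 1
        word = rng.choice(sorted(lexicons[speaker][target]))

        # Guessing: the hearer succeeds only if it already links word and target.
        success = word in lexicons[hearer][target]
        if success:
            # Reinforcement: both agents keep the winning word and drop rivals.
            lexicons[speaker][target] = {word}
            lexicons[hearer][target] = {word}
        else:
            # Correction: the speaker points, and the hearer adopts the word.
            lexicons[hearer][target].add(word)
        outcomes.append(success)

    return lexicons, outcomes


lexicons, outcomes = play_naming_game()
print("early success rate:", sum(outcomes[:50]) / 50)
print("late success rate:", sum(outcomes[-200:]) / 200)
```

In this toy version the early rounds are dominated by failures and corrections, while later rounds succeed once every agent has pruned its lexicon down to the same word per object. No agent is ever told which word is "right"; agreement comes only from the pairwise feedback.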

5.3 Emergent Consensus: The Proof of Social Grounding

The results of the Talking Heads experiment were definitive:

- Isolation: Without the game (interaction), agents developed private conceptualizations that were incompatible.

- Connection: Through thousands of iterations of the game, a shared, stable lexicon emerged. The group "agreed" that "Mokep" meant red triangle, and "Malav" meant blue square.

- Dynamics: The consensus was dynamic. New agents entering the network learned the language by playing the game. If the environment changed (new objects), the language adapted.

This experiment proves that Social Symbol Grounding (SSG) is the mechanism that stabilizes Physical Symbol Grounding (PSG).5 The "reason" the agents assigned a symbol to the pattern was to win the game—to successfully coordinate attention with another agent. In an isolated brain, there is no game, and thus no victory condition for meaning.

5.4 Feedback Loops and "Self-Correction"

The critical component here is the Feedback Loop. The user's query implies that without a group, there is no reason for the symbol. Steels' work shows that without the group, there is no correction for the symbol.

- Positive Feedback: Communicative success reinforces the link.

- Negative Feedback: Communicative failure weakens the link.

In an isolated brain, there is no negative feedback for semantic error. If I call a spoon "Glub" and then "Zorp," nothing bad happens. The symbol system never converges. The "Gossip Protocol" (the Language Game) provides the necessary selection pressure for convergence.
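A minimal sketch of the two feedback signals, assuming association strengths are numbers between 0 and 1 and a fixed step size. The function `update_association`, the starting values, and the lateral-inhibition step (weakening rival words after a success) are illustrative assumptions rather than details taken from the experiment.

```python
def update_association(weights, word, success, step=0.1):
    """Return a new word -> strength table after one communicative episode."""
    updated = dict(weights)
    current = updated.get(word, 0.5)
    if success:
        # Positive feedback: strengthen the word that worked.
        current = min(1.0, current + step)
        # Assumed lateral inhibition: rival words for the same object weaken.
        for other in updated:
            if other != word:
                updated[other] = max(0.0, updated[other] - step)
    else:
        # Negative feedback: weaken the word that failed.
        current = max(0.0, current - step)
    updated[word] = current
    return updated


weights = {"glub": 0.5, "zorp": 0.5}
weights = update_association(weights, "glub", success=True)
print(weights)  # "glub" is now stronger than "zorp"
```

An isolated agent never calls this function with `success=False`, because nobody is there to misunderstand it, so "glub" and "zorp" stay tied indefinitely.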

5.5 Table: Experimental Parameters and Outcomes in the Talking Heads Simulation

| Parameter | Isolated Agent | Interacting Population |
|---|---|---|
| Vocabulary Size | Zero or Infinite (Random) | Stabilizes to Optimal Number |
| Synonymy (One meaning, many words) | High (No pruning) | Low (Pruned by feedback) |
| Homonymy (One word, many meanings) | High (Ambiguity remains) | Low (Disambiguated by context) |
| Success Rate in Reference | N/A (No partner) | Approaches 100% over time |
| Symbol Grounding | Ungrounded (Subjective) | Grounded (Inter-subjective) |

---

6. The Protocol of Consensus: Gossip in Networks

The user's reference to "Gossip" is not merely metaphorical but also technical. In computer science, Gossip Protocols are a specific class of algorithms used in distributed systems. Comparing the cognitive "Gossip" (Dunbar) with the computational "Gossip" (CS) reveals striking structural identities that explain how the networked brain achieves meaning.

6.1 Defining the Gossip Protocol

In distributed systems (such as blockchain networks, sensor networks, or databases such as Cassandra and Dynamo-style stores), a Gossip Protocol (or Epidemic Algorithm) is a peer-to-peer communication mechanism.25

- The Goal: Disseminate information to all nodes in a network without a central server.

- The Method: Periodically, each node selects a random peer and exchanges state information.

- The Result: Eventual Consistency. Even if the network is massive and unreliable, the information (gossip) reaches every node with high probability, typically within a number of rounds that grows logarithmically with the size of the network.
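The three points above can be illustrated with a toy push-style gossip simulation. It is a sketch under simplifying assumptions: rounds are synchronous, no messages are lost, and every informed node picks one uniformly random peer per round. The function name `push_gossip_rounds` and the network size are hypothetical choices for the example.

```python
import random


def push_gossip_rounds(n_nodes=1000, seed=0, max_rounds=10_000):
    """Count the rounds until a rumor started at node 0 has reached every node."""
    rng = random.Random(seed)
    informed = {0}  # only node 0 knows the rumor at the start
    rounds = 0
    while len(informed) < n_nodes and rounds < max_rounds:
        # Each informed node tells one randomly chosen peer this round.
        newly_informed = {rng.randrange(n_nodes) for _ in informed}
        informed |= newly_informed
        rounds += 1
    return rounds, len(informed)


rounds, reached = push_gossip_rounds()
print(f"{reached} nodes informed after {rounds} rounds")
```

Because the informed set can at most double per round, a network of 1,000 nodes needs at least 10 rounds; in practice the total stays within a small multiple of that, which is the logarithmic behavior the protocol is known for.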

6.2 The Brain as a Node in the Gossip Network

We can model the "Spoon" problem as a distributed database consistency problem.

- The Data: The definition of "Spoon."

- The Nodes: Individual human brains.

- The Inconsistency: Brain A thinks "Spoon" includes shovels. Brain B thinks "Spoon" is only for eating.

- The Protocol:

  - Peer Selection: Brain A talks to Brain B (social interaction).

  - State Exchange: A says, "Hand me the spoon" (pointing to a shovel).

  - Conflict Resolution: B says, "That's not a spoon, that's a shovel."

  - Update: Brain A updates its local definition of "Spoon."

Through billions of such pairwise interactions (gossip), the entire human population converges on a roughly consistent definition of "Spoon." The "protocol such as Gossip" is the algorithm of culture.

6.3 Anti-Entropy and Robustness

Gossip protocols are used for Anti-Entropy—detecting and fixing differences between nodes.28

- Push/Pull: Agents push new words (memes) and pull definitions they don't know.

- Fault Tolerance: If a node "dies" (an individual leaves the group), the meaning of "Spoon" doesn't disappear. It is redundantly stored across the network.

- Scalability: Gossip protocols scale well, because each node only ever talks to a few peers per round. This matches Dunbar's observation that gossip allowed human groups to scale from 50 to 150+. A central server (a Chief defining all words) would become a bottleneck; peer-to-peer gossip has no such single choke point.
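A push-pull anti-entropy exchange can be sketched in a few lines. The version numbers and the "highest version wins" rule are assumptions borrowed from distributed databases for the sake of the example; human conversation has no literal version counter, and the helper names `merge` and `push_pull` are hypothetical.

```python
def merge(local, remote):
    """Return local state updated with every newer entry found in remote.

    Each state maps a word to a (version, definition) pair.
    """
    merged = dict(local)
    for word, (version, definition) in remote.items():
        if word not in merged or version > merged[word][0]:
            merged[word] = (version, definition)
    return merged


def push_pull(a, b):
    """One anti-entropy exchange: both peers end up with the same merged state."""
    merged = merge(a, b)
    return dict(merged), dict(merged)


brain_a = {"spoon": (2, "concave eating utensil")}
brain_b = {"spoon": (1, "anything that scoops"), "ladle": (1, "serving scoop")}
brain_a, brain_b = push_pull(brain_a, brain_b)
print(brain_a == brain_b)  # True
```

After the exchange Brain B has pulled the newer definition of "spoon" and Brain A has pulled "ladle", a word it did not know. If either brain later leaves the group, the other still carries both entries.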

6.4 Shared Reality and Prediction Error

Cognitive science concepts like Shared Reality Theory 29 and Predictive Coding map onto this protocol.

- Motivation: The brain wants to minimize "social prediction error." It is stressful to be misunderstood.

- Mechanism: To minimize error, the brain aligns its internal models (symbols) with the group's models.

- Gossip: This is the error-minimization signal. When we gossip, we are calibrating our predictive models against the group's reality. "Did you hear what X did?" "Yes, that was rude." Now both parties have calibrated their definition of "rude."

6.5 The "Gossip" of Objects: Social Affordances

The protocol extends to objects. The meaning of the spoon is not just "concave tool" but "object subject to etiquette rules." Gossip transmits these Social Affordances.3

- "Don't put the spoon in the microwave!" (Gossip about safety).

- "Use the little spoon for dessert." (Gossip about ritual).

The isolated brain cannot derive these rules from the physics of the spoon. They are pure software, running on the social network.

---

7. The Spoon as Artifact: Affordances and Social Construction

The choice of the "spoon" in the user's query is significant. A spoon is an artifact—a tool created by humans for humans. Its very existence presupposes a social context.

7.1 Gibsonian vs. Social Affordances

James Gibson defined affordances as the action possibilities an environment offers an animal.4 A rock affords "throwing." A spoon affords "scooping."

- Isolated Brain: Perceives Gibsonian affordances (Physics). "I can scoop with this."

- Networked Brain: Perceives Canonical Affordances (Culture). "This is for soup. It is not for digging."3

Experiments show that when people see a tool, the "canonical" (socially prescribed) motor program is activated in the brain automatically. This activation is the result of the symbol grounding protocol. The brain has been "trained" by the network to see the spoon not just as physics, but as function.

7.2 The "Spoon" in Spoon Theory

While distinct from the SGP, the cultural meme of "Spoon Theory" (used in disability communities to metaphorize energy limits) 30 illustrates how symbols detach from physical patterns to become abstract social currency.

- Physical Spoon: A piece of metal.

- Symbolic Spoon: A unit of energy/effort.

This abstraction is possible only because of the gossip protocol (blogs, forums, conversations) that spread the metaphor. An isolated brain could never independently derive "unit of energy" from the physical pattern of a spoon. This proves the user's point: meaning (energy unit) is assigned to the pattern (spoon) solely for social reasons (communicating disability status).

7.3 The Social Construction of the Spoon

Social Constructionism argues that knowledge is created through social processes.32 The spoon is a "social construct" in the sense that its identity as a distinct category of object is maintained by the group.

- Boundary Maintenance: Where does a "spoon" end and a "ladle" begin? The boundary is negotiated.

- Material Culture: The "Gossip" about the spoon (recipes, etiquette, table settings) embeds the object in a web of meaning. The spoon is a node in the cultural graph.

---

8. Conclusion: The Emergent Mind

The analysis confirms the user's thesis with a high degree of confidence. The proposition that "a single isolated brain can not develop the meaning because the symbol grounding must happen in a group" is supported by the convergence of:

- Philosophy: The logical impossibility of private language (Wittgenstein) and the externalist nature of reference (Putnam).

- Evolution: The functional necessity of language for social bonding in large groups (Dunbar) and the prohibitive cost of symbolization without communication.

- Robotics: The experimental evidence that embodied agents only converge on a grounded lexicon through interactive language games (Steels).

- Computer Science: The mathematical reality that distributed consistency requires a propagation protocol like Gossip.

8.1 The Verdict on the Spoon

There is no reason for an isolated brain to assign a symbol to the physical pattern of a spoon because:

- No Utility: It can use the spoon via direct affordance without naming it.

- No Stability: Without social feedback, the name is arbitrary and transient.

- No Audience: The symbol is a packet of information designed for transmission. Without a receiver, the packet is noise.

8.2 The Gossip Protocol as Cognitive Substrate

The brain is not a standalone computer; it is a terminal in a planetary network. The "Gossip Protocol" is the operating system of this network. It circulates the symbols, enforces the norms, and synchronizes the realities of billions of nodes.

The meaning of the spoon resides not in the metal, nor in the neuron, but in the social synapse—the invisible, gossip-mediated link between minds. Grounding is not an act of perception; it is an act of communion.

Works cited

-

Symbol grounding problem - Wikipedia, accessed January 21, 2026, https://en.wikipedia.org/wiki/Symbol_grounding_problem

-

The Symbol Grounding Problem - arXiv, accessed January 21, 2026, https://arxiv.org/html/cs/9906002

-

Affordances, Adaptive Tool Use and Grounded Cognition - Frontiers, accessed January 21, 2026, https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2011.00053/full

-

Relational Symbol Grounding through Affordance Learning: An Overview of the ReGround Project - ISCA Archive, accessed January 21, 2026, https://www.isca-archive.org/glu_2017/antanas17_glu.pdf

-

(PDF) The grounding and sharing of symbols - ResearchGate, accessed January 21, 2026, https://www.researchgate.net/publication/228626115_The_grounding_and_sharing_of_symbols

-

Private language argument - Wikipedia, accessed January 21, 2026, https://en.wikipedia.org/wiki/Private_language_argument

-

The Private Language Argument | Issue 58 - Philosophy Now, accessed January 21, 2026, https://philosophynow.org/issues/58/The_Private_Language_Argument

-

Wittgenstein and the Private Language Argument - LessWrong, accessed January 21, 2026, https://www.lesswrong.com/posts/TeKZjxczbTEFnLjot/wittgenstein-and-the-private-language-argument

-

Hilary Putman: Twin Earth, Meaning, and the Mind | by Antoine Decressac (#LinguisticallyYours) | Medium, accessed January 21, 2026, https://medium.com/@adecressac/hilary-putman-twin-earth-meaning-and-the-mind-375c3959106a

-

A shocking idea about meaning | Cairn.info, accessed January 21, 2026, https://shs.cairn.info/revue-internationale-de-philosophie-2001-4-page-471?lang=en

-

Meaning just ain't in any individual head, an inter-subjective approach to meaning. - Journals, accessed January 21, 2026, https://ojs.st-andrews.ac.uk/index.php/aporia/article/download/2612/2000/10711

-

Why is the notion of 'family resemblance' introduced to Wittgenstein's later work - JAIST, accessed January 21, 2026, https://www.jaist.ac.jp/~g-kampis/Course/Two/Family_Resemblances.doc

-

ARTICLE SECTION Wittgenstein and Family Concepts, accessed January 21, 2026, https://www.nordicwittgensteinreview.com/article/download/3384/Fulltext%20pdf/8336

-

Family resemblance - Wikipedia, accessed January 21, 2026, https://en.wikipedia.org/wiki/Family_resemblance

-

A Multi-Agent Systems Approach to Gossip and the Evolution of ..., accessed January 21, 2026, https://rinekeverbrugge.nl/wp-content/uploads/2017/01/SlingerlandMuldervdVaartVerbrugge2009.pdf

-

Grooming, Gossip, and the Evolution of Language | Summary, Quotes, FAQ, Audio - SoBrief, accessed January 21, 2026, https://sobrief.com/books/grooming-gossip-and-the-evolution-of-language

-

Grooming, Gossip and the Evolution of Language - Wikipedia, accessed January 21, 2026, https://en.wikipedia.org/wiki/Grooming,_Gossip_and_the_Evolution_of_Language

-

Why You Were Born to Gossip | Psychology Today, accessed January 21, 2026, https://www.psychologytoday.com/us/blog/talking-apes/201502/why-you-were-born-to-gossip

-

Explaining the evolution of gossip - PNAS, accessed January 21, 2026, https://www.pnas.org/doi/10.1073/pnas.2214160121

-

The Bright and Dark Side of Gossip for Cooperation in Groups - PMC - PubMed Central, accessed January 21, 2026, https://pmc.ncbi.nlm.nih.gov/articles/PMC6596322/

-

The Talking Heads experiment: Origins of words and meanings - Language Science Press, accessed January 21, 2026, https://langsci-press.org/catalog/book/49

-

The Talking Heads Experiment - Infoling Revista, accessed January 21, 2026, https://infoling.org/revista/index.php?t=ir&info=Libros&id=1975&r=90;

-

(PDF) Social symbol grounding and language evolution - ResearchGate, accessed January 21, 2026, https://www.researchgate.net/publication/228928925_Social_symbol_grounding_and_language_evolution

-

Luc Steels - [langev] Language Evolution and Computation, accessed January 21, 2026, https://langev.com/author/lsteels

-

What Is Gossip Protocol in Blockchain? | Liminal Custody, accessed January 21, 2026, https://www.liminalcustody.com/knowledge-center/what-is-gossip-protocol/#:~:text=A%20gossip%20protocol%20is%20a,eventually%20receive%20the%20same%20information.

-

Gossip protocol - Wikipedia, accessed January 21, 2026, https://en.wikipedia.org/wiki/Gossip_protocol

-

What Is Gossip Protocol in Blockchain? | Liminal Custody, accessed January 21, 2026, https://www.liminalcustody.com/knowledge-center/what-is-gossip-protocol/

-

Gossiping in Distributed Systems, accessed January 21, 2026, https://www.distributed-systems.net/my-data/papers/2007.osr.pdf

-

Motivated Categories: Social Structures Shape the Construction of Social Categories Through Attentional Mechanisms - PMC - PubMed Central, accessed January 21, 2026, https://pmc.ncbi.nlm.nih.gov/articles/PMC10559649/

-

Spoon Theory - MIUSA - Mobility International USA, accessed January 21, 2026, https://miusa.org/resource/best-practices/spoon-theory/

-

Spoon theory, MS and managing my energy levels, accessed January 21, 2026, https://www.mssociety.org.uk/support-and-community/community-blog/spoon-theory-ms-and-managing-my-energy-levels

-

Social Constructionism in Education: How Knowledge is Socially Created, accessed January 21, 2026, https://www.structural-learning.com/post/social-constructionism

-

Naturalistic Approaches to Social Construction - Stanford Encyclopedia of Philosophy, accessed January 21, 2026, https://plato.stanford.edu/archives/win2014/entries/social-construction-naturalistic/

The Iron Cage as Cradle: The Counter-Intuitive Symbiosis of Rigid ERP Systems and Agentic AI

Summary

The history of enterprise resource planning (ERP) systems, particularly those architected by SAP, has been dominated by a narrative of necessary friction. For decades, organizations have grappled with the "Iron Cage" of SAP’s architecture: a landscape defined by unyielding data structures, unforgiving validation logic, and an authorization concept so granular that it frequently impedes human agility. The prevailing industry dogma has viewed these characteristics as liabilities—technical debt incurred in the pursuit of integration, resulting in high training costs, "swivel-chair" interfaces, and a user experience often characterized by frustration. Corporations have spent billions on change management and overlay interfaces to shield human operators from the raw, deterministic complexity of the core system.

However, as the enterprise technology landscape pivots violently toward the era of Agentic Artificial Intelligence (AI), a profound inversion of value is occurring. The very characteristics that made SAP environments hostile to human cognitive limitations, namely strict constraints, hyper-granular Role-Based Access Control (RBAC), and blocking validation errors, are transforming into the ideal substrate for autonomous AI agents.1

This report advances a counter-intuitive thesis: the "hard to run" nature of SAP ERPs constitutes a necessary breeding ground for safe, reliable, and effective Agentic AI. Unlike generative AI operating in unstructured environments—often described as building "floating castles in thin air"—agents within an SAP ecosystem operate within a deterministic physics engine. This rigid structure provides the "grounding" necessary to prevent hallucination, the "signals" necessary for orchestration, and the "boundaries" necessary for security.

The following analysis explores this symbiosis in detail. It describes how the administrative burden of the Profile Generator (PFCG) becomes a zero-trust security framework for "Micro-Agents." It demonstrates how process friction acts as a precise communication protocol between Master Agents and Sub-Agents. It argues that the ABAP Dictionary’s strict typing serves as an immutable guardrail against generative error. Finally, it maps the technical architecture of the SAP Business Technology Platform (BTP), SAP Joule, and the Knowledge Graph, illustrating how these components operationalize the rigorous, structured legacy of SAP into a dynamic, autonomous future.2

1. The Inversion of Usability: From User Experience to Agent Experience

The evolution of enterprise software has traditionally tracked the trajectory of consumer software: a relentless pursuit of "ease of use." The metric of success was the reduction of friction for the human user. "Intuitive" design meant hiding complexity, broadening access, and smoothing over the rough edges of database integrity with helpful wizards and forgiving inputs. In the context of SAP, this drive led to the development of SAP Fiori and numerous simplified GUIs intended to mask the underlying complexity of transaction codes like VA01 (Create Sales Order) or ME21N (Create Purchase Order).

In the emergent era of Agentic AI, the design paradigm must shift from User Experience (UX) to Agent Experience (AX). Agents, unlike humans, do not benefit from ambiguity or "forgiveness." They thrive on explicit constraints, structured error messages, and deterministic pathways. The "hard" nature of SAP—its refusal to accept a transaction unless every master data field is perfectly aligned—is precisely what makes it an effective environment for agents.3

1.1 The Deterministic Substrate for Probabilistic Intelligence

Generative AI, driven by Large Language Models (LLMs), is inherently probabilistic. These models function by predicting the next token in a sequence based on statistical likelihood derived from vast training corpora. While this allows for unprecedented flexibility and "reasoning" capabilities, it introduces the critical risk of "hallucination" or "confabulation."4 In a creative context, a hallucination is a curiosity; in an enterprise ledger, it is a compliance violation or financial fraud.

When probabilistic agents are introduced into an enterprise environment, they require a deterministic substrate to function safely. SAP acts as this substrate. It functions as a physics engine for business reality. Just as a robot in a physical simulation relies on gravity and collision detection to learn to walk, an AI agent in SAP relies on validation logic and foreign key checks to learn to transact.

If an AI agent attempts to invent a new "Incoterm" that sounds plausible (e.g., "FOB Moon Base"), a flexible system might accept it as a text string. SAP, however, will reject it immediately because it does not exist in table TINC. This rejection is not a failure of the system; it is a successful containment of probabilistic error. The "hardness" of the system provides the negative feedback loop required for the agent to self-correct and learn.
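The containment described above can be sketched in a few lines of Python. This is a minimal illustration, not SAP code: `VALID_INCOTERMS` is a hypothetical in-memory stand-in for the check table (TINC in the text), and `validate_incoterm` and `propose_with_feedback` are names invented for this example.

```python
from typing import Optional

# Hypothetical stand-in for the Incoterms check table (TINC in the text).
VALID_INCOTERMS = {"EXW", "FCA", "FOB", "CIF", "DAP", "DDP"}


class ValidationError(Exception):
    """Raised when a proposed value is absent from its check table."""


def validate_incoterm(proposed: str) -> str:
    """Accept the value only if it exists in the check table."""
    code = proposed.strip().upper()
    if code not in VALID_INCOTERMS:
        raise ValidationError(f"Incoterm {proposed!r} is not defined in the check table")
    return code


def propose_with_feedback(candidates: list) -> tuple:
    """Try candidates in order; collect each rejection as feedback for the agent."""
    feedback = []
    for candidate in candidates:
        try:
            return validate_incoterm(candidate), feedback
        except ValidationError as err:
            feedback.append(str(err))
    accepted: Optional[str] = None
    return accepted, feedback
```

Calling `propose_with_feedback(["FOB Moon Base", "FOB"])` rejects the invented term, records the rejection message, and accepts the second candidate. The rejection message is the negative feedback the agent would use to correct itself.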

1.2 System 1 vs. System 2 in the Enterprise

Cognitive science distinguishes between System 1 thinking (fast, intuitive, heuristic) and System 2 thinking (slow, deliberate, logical).5 Humans are naturally System 1 thinkers who struggle with the System 2 demands of complex ERP transactions. We forget codes, we misread fields, and we bypass protocols to "get it done."

Traditional ERP implementations forced humans to act like System 2 machines, causing massive friction. Agentic AI, specifically architectures that use "Chain of Thought" reasoning, mimics System 2 logic but operates at the speed of software. By coupling Agentic AI with SAP, the enterprise achieves a cognitive hybrid:

- The Agent provides the adaptive planning, intent understanding, and unstructured data processing (System 1 flexibility).

- The ERP Core provides the immutable logic, regulatory boundaries, and financial integrity (System 2 rigor).

This report posits that the decades of investment companies have made in configuring their SAP systems—defining every tolerance limit, every plant parameter, every pricing procedure—is not a sunk cost of legacy debt. Rather, it is pre-investment in the "World Model" required for autonomous agents to function. Without this rigorous World Model, agents are merely chatbots with no understanding of consequence.

2. The Architecture of Restriction: Granular Roles as a Zero-Trust Framework

The first pillar of the core thesis rests on the granularity of SAP’s authorization concept. Security in SAP is not merely a gate; it is a complex lattice of permissions managed via the Profile Generator (PFCG). Access is controlled not just by transaction code, but by the content of the data itself via Authorization Objects.

2.1 The Legacy Challenge: The Human Authorization Paradox

Implementing the Principle of Least Privilege in a human-centric SAP environment has historically been a Sisyphean task. The system allows administrators to restrict access down to specific organizational units (e.g., Sales Org US01), document types (e.g., Sales Order ZOR), and activities (e.g., 01 for Create, 03 for Display).

However, managing this level of granularity for thousands of human employees is administratively crushing.

- Availability: There are rarely enough humans to dedicate specific individuals to hyper-narrow roles (e.g., "Dallas Retail Sales Order Clerk"). Instead, a single "Sales Rep" covers multiple regions and channels.

- Psychology: Humans react negatively to authorization errors. A "Not Authorized" message (typically diagnosed via transaction SU53) is viewed as an impediment to doing one's job. It generates helpdesk tickets, frustration, and eventual "role creep," where administrators grant wider access (e.g., Sales Org *) just to silence the complaints.6

Consequently, most SAP environments today operate with "Composite Roles" that are vastly over-provisioned relative to the strict needs of any single transaction. This creates a security surface area that is vulnerable to insider threat and error.

2.2 The Agentic Opportunity: The Rise of the Micro-Agent

Agentic AI inverts this dynamic. An AI agent does not get frustrated. It does not suffer from "alert fatigue." It does not require a "broad" role to function comfortably. This allows for the implementation of a Zero Trust Architecture for agents using Micro-Agents.

In this model, a general-purpose "Master Agent" (e.g., SAP Joule) acts as the interface. When a complex request arrives—"Book a sales order for a retail customer in Dallas"—the Master Agent does not execute the transaction itself. Instead, it instantiates or calls a specialized "Dallas Retail Sales Order Agent."

Anatomy of a Micro-Agent:

This sub-agent is a specialized identity (or a session context via Principal Propagation) that possesses an SAP Role (PFCG) restricted to the absolute minimum viable privileges:

- Object V_VBAK_VKO:

  - Sales Org: US01 (North America)

  - Distribution Channel: 01 (Retail)

  - Division: 00

- Object V_VBAK_AAT:

  - Document Type: ZOR (Standard Order)

- Object M_MATE_WRK:

  - Plant: DL01 (Dallas)

If this agent attempts to book an order for the Wholesale channel (02), the SAP kernel blocks the transaction immediately. The "hard" security model acts as a physical containment field. In a human scenario, this block is a process failure. In an agentic scenario, this is a valid negative test. It confirms that the agent is operating within its guardrails.6
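A minimal Python sketch of this deny-by-default check follows. It is a toy model, not the SAP kernel's authorization logic: `MicroAgentRole` is a hypothetical class, and the field names (`VKORG`, `VTWEG`, `SPART`, `AUART`, `WERKS`) are assumed here to be the fields behind the values listed above.

```python
from dataclasses import dataclass, field


@dataclass
class MicroAgentRole:
    """Illustrative role: authorization object -> field -> allowed values."""
    name: str
    grants: dict = field(default_factory=dict)

    def is_authorized(self, auth_object: str, **required: str) -> bool:
        allowed = self.grants.get(auth_object)
        if allowed is None:
            return False  # object missing from the role: deny by default
        # Every requested field value must be explicitly granted.
        return all(value in allowed.get(fld, set()) for fld, value in required.items())


DALLAS_RETAIL = MicroAgentRole(
    name="Dallas Retail Sales Order Agent",
    grants={
        "V_VBAK_VKO": {"VKORG": {"US01"}, "VTWEG": {"01"}, "SPART": {"00"}},
        "V_VBAK_AAT": {"AUART": {"ZOR"}},
        "M_MATE_WRK": {"WERKS": {"DL01"}},
    },
)
```

With this role, `DALLAS_RETAIL.is_authorized("V_VBAK_VKO", VKORG="US01", VTWEG="02")` returns `False`. A test harness can treat that denial as a passing negative test for the Wholesale channel.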

2.3 Dynamic Role Resolution and Principal Propagation

The technical realization of this involves sophisticated identity management.7 The research highlights Principal Propagation as a critical mechanism on the SAP Business Technology Platform (BTP).8

When a human user interacts with an AI agent, the agent must not operate with a "Super User" service account. It must inherit the context of the human user. Through the SAP Cloud Connector and BTP Connectivity service, the user's identity is propagated to the backend SAP S/4HANA system. The agent effectively "becomes" the user for the duration of the transaction.

- Benefit: The agent is instantly constrained by the user's existing PFCG roles. If the user cannot approve a PO over USD 10,000, neither can their AI assistant.

- Risk Mitigation: This prevents "jailbreaking" attacks where a user might convince an LLM to bypass business rules. The LLM might agree, but the backend SAP kernel will refuse the commit.

For autonomous, "headless" agents (e.g., nightly batch repair bots), the system uses specific Technical Users with highly restricted roles. The "hard to run" aspect of defining these roles—the need to map out every authorization object—ensures that these autonomous bots have no lateral movement capability. If a "Price Update Bot" is compromised, it cannot read HR data because it fundamentally lacks the authorization object P_ORGIN.9
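The separation between what the model agrees to and what the backend commits can be illustrated with a small Python sketch. All names here are hypothetical (`USER_APPROVAL_LIMITS`, `backend_approve_po`, `agent_approve_po`), and the approval limit is a simplified stand-in for the user's real PFCG-derived authorizations.

```python
class CommitRefused(Exception):
    """Raised by the toy backend when the propagated user lacks authority."""


# Hypothetical mapping of user -> purchase order approval limit in USD.
USER_APPROVAL_LIMITS = {"jdoe": 10_000}


def backend_approve_po(propagated_user: str, amount: float) -> str:
    """The backend checks the propagated identity, not the agent's opinion."""
    limit = USER_APPROVAL_LIMITS.get(propagated_user, 0)
    if amount > limit:
        raise CommitRefused(f"{propagated_user} may not approve more than {limit}")
    return "approved"


def agent_approve_po(user: str, amount: float, llm_agrees: bool) -> str:
    """The agent acts under the caller's identity, never as a super user."""
    if not llm_agrees:
        return "declined by agent"
    try:
        return backend_approve_po(user, amount)
    except CommitRefused as err:
        return f"refused by backend: {err}"
```

Even when `llm_agrees` is `True` (for instance after a successful jailbreak prompt), `agent_approve_po("jdoe", 25_000, True)` ends in a backend refusal, because the limit is enforced where the commit happens.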

2.4 Table: Comparison of Authorization Paradigms

| Feature | Human-Centric Model | Agent-Centric Model | Implications for Security |
|---|---|---|---|
| Role Scope | Broad, Composite Roles (e.g., "AP Manager"). | Narrow, Atomic Roles (e.g., "Invoice Poster - Region A"). | Drastically reduced blast radius for compromised agents. |
| Reaction to Denial | Frustration, Helpdesk Tickets, Workarounds. | Error Catching, Retry Logic, Escalation. | Failures are handled programmatically without disruption. |
| Access Granularity | Often aggregated at Org Unit level. | Granular down to Field Value (e.g., Document Type). | Prevents "confused deputy" attacks where agents misuse broad access. |
| Identity Lifecycle | Static assignment (Quarterly reviews). | Dynamic / JIT assignment or strictly bounded Service Users. | Reduces the window of opportunity for privilege abuse. |

3. Friction as Signal: Streamlining Business Processes via Orchestration

The second pillar of the thesis addresses the friction inherent in implementing business processes in SAP. In traditional operations, "friction" is synonymous with "exception" or "error." An order is blocked because a credit check failed. An invoice is parked because of a price variance. A shipment is delayed because the material master view is missing for the destination plant.

3.1 The Cost of Human Latency

For human agents, these exceptions are productivity killers. Consider the scenario where a sales agent attempts to book an order, only to find the customer is not extended to the specific Sales Area.

- Stop: The transaction fails.

- Search: The agent must figure out why (deciphering error VP 204).

- Identify: The agent must find who owns Customer Master Data.

- Communicate: The agent sends an email or logs a ticket.

- Wait: The process enters a holding pattern, often for days ("Most of the time they don't even know who to call").10

This latency destroys process efficiency. The rigidity of the system—the requirement that the customer must be extended before the order can be saved—is the bottleneck.

3.2 The Agentic Orchestration Layer

In an Agentic AI ecosystem, this friction is re-contextualized. The blocking error is not a "stop" signal; it is a functional specification for a remediation workflow. The "hard" constraint becomes a trigger for multi-agent orchestration.

Scenario: The "Missing Master Data" Handoff

- Sales Order Agent (Micro-Agent A) attempts to create an order via OData API API_SALES_ORDER_SRV.

- SAP Kernel returns error: Customer 1000 is not defined in Sales Area US01/10/00.

- Sales Order Agent parses this error. Unlike a human who sees "failure," the agent sees a dependency.

- Orchestration: The Sales Agent packages this error context and routes a request to the Master Data Agent (Micro-Agent B).

  - Prompt/Payload: "I need to transact with Customer 1000 in Area US01/10/00. Please extend."

- Master Data Agent receives the request.

  - It checks the Governance Policy (via RAG on policy documents).

  - It validates the customer's credit standing.

  - It executes the extension via API API_BUSINESS_PARTNER.

  - It returns a "Success" signal to the Sales Agent.

- Sales Order Agent retries the transaction. Success.

This entire sequence occurs in seconds. The "ball keeps rolling" without human intervention. The rigidity of the SAP system—the fact that it threw a specific, blocking error—provided the precise signal needed for the agentic handoff. If the system were "easier" (i.e., allowed the order to proceed with incomplete data), it would create downstream chaos in fulfillment and billing. The "hardness" forces resolution upstream, where agents are most effective.11
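The handoff can be simulated in Python to make the control flow concrete. This is a sketch under stated assumptions: the `EXTENDED` set is a toy stand-in for backend master data, the functions do not call any real SAP API, and the Master Data Agent's policy and credit checks are omitted.

```python
class BlockingError(Exception):
    """Structured error carrying the context the next agent needs."""

    def __init__(self, code: str, customer: str, sales_area: str):
        super().__init__(f"{code}: customer {customer} not defined in sales area {sales_area}")
        self.code, self.customer, self.sales_area = code, customer, sales_area


# Toy backend state: (customer, sales area) pairs that already exist.
EXTENDED = {("2000", "US01/10/00")}


def create_sales_order(customer: str, sales_area: str) -> str:
    if (customer, sales_area) not in EXTENDED:
        raise BlockingError("VP 204", customer, sales_area)
    return f"order created for {customer} in {sales_area}"


def master_data_agent(customer: str, sales_area: str) -> bool:
    """Stand-in for Micro-Agent B; policy and credit checks are omitted."""
    EXTENDED.add((customer, sales_area))
    return True


def sales_order_agent(customer: str, sales_area: str, max_retries: int = 1) -> str:
    for attempt in range(max_retries + 1):
        try:
            return create_sales_order(customer, sales_area)
        except BlockingError as err:
            # The error is treated as a dependency: hand it off, then retry.
            if attempt == max_retries or not master_data_agent(err.customer, err.sales_area):
                return f"escalated to human: {err}"
    return "escalated to human"
```

The design choice worth noting is that the blocking error is a structured object rather than a free-text message, so the receiving agent gets the customer and sales area without having to parse prose.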

3.3 Multi-Agent Systems (MAS) and Departmental Agents

This logic extends to complex inter-departmental workflows. The research identifies specific agent roles such as the Dispute Resolution Agent, Cash Collection Agent, and Sourcing Agent.11

These agents form a Multi-Agent System (MAS) that mirrors the organizational chart but operates with high-speed digital interconnects.

- The "Finance Agent" and "Sales Agent" negotiate credit blocks. When a Sales Agent requests a credit release, the Finance Agent analyzes the customer's payment history (using vector analysis of past interactions) and autonomously decides whether to grant a temporary override via transaction VKM1.

- The "Sourcing Agent" and "Planning Agent" collaborate on inventory shortages. If the Planning Agent detects a stock-out (MD04 signal), the Sourcing Agent autonomously initiates an RFP process for the specific material.12

The "friction" of the departmental silos—enforced by the distinct SAP modules (FI, SD, MM)—becomes the protocol for agent negotiation.

4. The Hallucination Firewall: Validation Logic as Reality Check

The third pillar concerns the core philosophy of SAP: Data Integrity. SAP is built on the premise that it is better to stop a process than to corrupt the database. This "NEVER let a wrong entry hit the database" philosophy is the single most important safety feature for deploying Generative AI in the enterprise.

4.1 The Existential Risk of AI in ERP

Generative AI is prone to "hallucinations"—generating plausible but incorrect information. In a chat application, a hallucination might be a made-up fact. In an ERP system, a hallucination could be:

- Posting an invoice to a non-existent General Ledger account.

- Inventing a unit of measure (e.g., "Box" instead of "Case").

- Creating a delivery for a date in the past.

If an AI agent were given direct SQL write access to the database, it could corrupt the financial integrity of the organization in milliseconds.

4.2 The ABAP Dictionary as a Constraint Engine

In SAP, the ABAP Dictionary (DDIC) and the application logic act as an immutable constraint engine. An agent cannot book an invoice to a non-existent cost center because the foreign key check against table CSKS will fail. It cannot enter a date in the past if the posting period (OB52) is closed.

These validations act as a Hallucination Firewall.

- Three-Way Match: If an Accounts Payable Agent tries to post an invoice for USD 1,000 when the PO was for USD 900, the SAP system blocks the posting (Price Variance > Tolerance Limit).

- Behavioral Correction: This block serves as a feedback signal. The agent learns that its "belief" (that the invoice should be paid) conflicts with "reality" (the system rules). This forces the agent into a Reflection Loop: "Why did I fail? Variance detected. Action: Park invoice and notify human."

This architecture ensures that the AI handles the "Happy Path" (perfect matches), while the strict validation logic filters out the hallucinations and edge cases for human review.
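As a rough illustration of the price-variance block and the resulting "park and notify" decision, consider the Python sketch below. It covers only the price comparison between PO and invoice, not a full three-way match with goods receipt, and `check_price_variance` and the tolerance value are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class MatchResult:
    action: str      # "post" or "park"
    variance: float  # invoice amount minus PO amount
    note: str


def check_price_variance(po_amount: float, invoice_amount: float,
                         tolerance: float = 0.0) -> MatchResult:
    """Post only when the invoice is within tolerance of the PO; otherwise park."""
    variance = invoice_amount - po_amount
    if abs(variance) <= tolerance:
        return MatchResult("post", variance, "within tolerance")
    note = (f"price variance {variance:+.2f} exceeds tolerance "
            f"{tolerance:.2f}; park invoice and notify human")
    return MatchResult("park", variance, note)
```

For the example in the text, `check_price_variance(900, 1000, tolerance=50)` yields the action `"park"` with a variance of 100, which is the structured signal the agent would reflect on.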

4.2.1 The Rust Compiler Analogy: Unforgiving Logic as Self-Correction

Just as the Rust compiler (specifically the borrow checker) refuses to compile code that violates memory safety rules, the SAP Kernel (specifically the ABAP Dictionary and Business Object logic) refuses to commit transactions that violate business integrity rules. The analogy holds technically in four respects, each of which shows how this "unforgiving" nature forces the AI to self-correct:

- The "Compiler" as a Reality Check: In Rust, the compiler prevents memory corruption. In SAP, the validation logic prevents ledger corruption. For an AI agent, the system returns a binary signal: Success or Hard Stop. This forces the AI to remain "hallucination-free by design."

- Error Messages as "Compiler Errors" for Agents: The AI agent reads a system error code (e.g., VP 204 - Customer not defined) not as a failure, but as a prompt to trigger a correction, such as calling a "Create Customer" tool.

- "Check Mode" = "Dry Run": SAP BAPIs often feature a Test Run or Simulation Mode. Agents can "compile" their transaction in simulation mode to see if it would pass, fixing errors iteratively before writing to the real database.

- Real-World Convergence: SAP itself uses Rust. For Joule for Developers, SAP uses Constrained Decoding backed by a Rust parser to ensure the AI generates valid ABAP code, confirming the industry's use of "unforgiving compilers" as guardrails.
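The "compile in simulation mode, then commit" loop from the list above can be sketched in Python. This is a toy model: `post_document` is a hypothetical function with a `test_run` flag, loosely modeled on the idea of a BAPI test run, and the valid cost centers are invented.

```python
def post_document(doc: dict, test_run: bool, ledger: list) -> list:
    """Return the names of invalid fields; write only on a clean real run."""
    errors = []
    if doc.get("cost_center") not in {"CC100", "CC200"}:  # hypothetical check table
        errors.append("cost_center")
    if doc.get("amount", 0) <= 0:
        errors.append("amount")
    if not errors and not test_run:
        ledger.append(dict(doc))
    return errors


def simulate_then_commit(doc: dict, fixes: dict, ledger: list, max_rounds: int = 3) -> bool:
    """Iterate in test-run mode, applying the agent's fix for each reported field."""
    doc = dict(doc)
    for _ in range(max_rounds):
        errors = post_document(doc, test_run=True, ledger=ledger)
        if not errors:
            post_document(doc, test_run=False, ledger=ledger)
            return True
        for field_name in errors:
            if field_name not in fixes:
                return False  # no known repair: stop and escalate
            doc[field_name] = fixes[field_name]
    return False
```

Nothing reaches `ledger` until a simulated run comes back clean, which mirrors the way a compiler rejects a program before it ever executes.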

Summary

Taken together, this mechanism is a form of neuro-symbolic AI:

- The Neural part (The AI): Provides the flexibility, intent understanding, and planning.

- The Symbolic part (Rust/SAP): Provides the hard constraints, logic, and "unforgiving" validation.

This combination ensures that the AI can be autonomous but never dangerous.

4.3 Human-in-the-Loop (HITL) by Design

The thesis highlights that "failure to process is a flag to bring in human expert." This operationalizes the Human-in-the-Loop concept not as a constant monitor, but as an Exception Handler.

When the AI hits a "hard stop" in SAP that it cannot resolve via its tools (e.g., a strategic decision to pay a vendor despite a discrepancy to maintain the relationship), it escalates. The human expert receives a structured task: "Agent blocked by Price Variance (USD 100). Do you wish to override?"

This elevates the human role from data entry to strategic arbitration. The "hard" system ensures that the AI never acts autonomously in ambiguous or erroneous states.13

5. Grounding the Ghost: Structured Data and the SAP Knowledge Graph

The fourth pillar addresses the data itself. "Companies trying to implement Agentic AI without well-grounded ERP are trying to build floating castles in thin air." AI models require context to reason. Unstructured data (emails, PDFs) provides semantic richness but lacks structural integrity. SAP provides the structural skeleton of the enterprise.

5.1 The SAP Knowledge Graph

To make this structural richness accessible to AI, SAP has introduced the SAP Knowledge Graph.14

- The Problem: LLMs speak natural language. SAP speaks "technical codes" (Tables MARA, KNA1, BKPF). An LLM does not inherently know that KUNNR is a Customer Number or that a Sales Order Item connects to a Delivery Item via the Document Flow table VBFA.

- The Solution: The Knowledge Graph creates a semantic layer that maps technical entities to business concepts. It encodes the relationships: Customer --places--> Order --contains--> Material.

This "grounds" the AI. When an agent is asked, "Check the status of the order for Dallas," it doesn't guess. It traverses the graph: Customer(Dallas) -> SalesOrder -> Delivery -> GoodsIssue. This deterministic traversal prevents the agent from hallucinating relationships that don't exist.
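A deterministic traversal of this kind can be sketched with a plain dictionary. The edge table, relation names, and document numbers below are invented for illustration and do not reflect the actual schema of the SAP Knowledge Graph.

```python
# Hypothetical edges: (node, relation) -> next node.
GRAPH = {
    ("Customer:Dallas", "places"): "SalesOrder:4711",
    ("SalesOrder:4711", "fulfilled_by"): "Delivery:8001",
    ("Delivery:8001", "posted_as"): "GoodsIssue:9001",
}


def traverse(start: str, relations: list) -> list:
    """Follow the named relations one by one; fail loudly on a missing edge."""
    path = [start]
    node = start
    for relation in relations:
        node = GRAPH.get((node, relation))
        if node is None:
            raise LookupError(f"no '{relation}' edge from {path[-1]}")
        path.append(node)
    return path
```

The important property is the failure mode: when an edge does not exist, the traversal raises an error instead of returning a plausible guess.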

5.2 Vector RAG and the HANA Vector Engine

SAP HANA Cloud’s Vector Engine enables Retrieval-Augmented Generation (RAG) that combines structured and unstructured data.15

- Structured Grounding: "Show me quality defects for Material X" (SQL Query to QMEL table).

- Unstructured Grounding: "Show me complaints where the customer mentioned 'strange smell'" (Vector search on text descriptions).

The combination allows agents to reason with high precision: "I found 5 complaints about 'smell' (Unstructured). All 5 are linked to Batch #992 (Structured). Conclusion: Batch #992 is defective."

Without the rigid link between the Complaint Notification and the Batch Record provided by the SAP data model, this correlation would be impossible to establish with certainty. The "hard" structure enables the "smart" reasoning.
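The combination of unstructured search and structured linkage can be sketched in pure Python. This is not the HANA Vector Engine API: the bag-of-words `embed` function is a deliberately crude stand-in for a learned embedding model, and the complaint records are invented.

```python
import math
from collections import Counter

# Invented complaint records; "batch" is the structured link, "text" is unstructured.
COMPLAINTS = [
    {"id": 1, "batch": "992", "text": "strange smell from the package"},
    {"id": 2, "batch": "992", "text": "product has a strange chemical smell"},
    {"id": 3, "batch": "417", "text": "box arrived late and dented"},
]


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would use a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def batches_for_query(query: str, threshold: float = 0.2) -> Counter:
    """Similarity search on text, then group the hits by the structured batch key."""
    q = embed(query)
    hits = [c for c in COMPLAINTS if cosine(q, embed(c["text"])) >= threshold]
    return Counter(c["batch"] for c in hits)
```

The similarity step finds the relevant complaints, but the conclusion about a specific batch is only possible because each complaint carries a reliable `batch` key.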

6. The Technical Stack: Architecture of the Agentic Enterprise

To realize this thesis, organizations must deploy a specific technical architecture centered on the SAP Business Technology Platform (BTP). This stack bridges the gap between the rigid core and the fluid agent.

6.1 SAP Joule and the Orchestration Layer

SAP Joule serves as the primary interface and orchestrator.16 It is not merely a chatbot; it is a runtime environment that manages:

- Intent Recognition: Mapping user prompts to specific skills.

- Context Management: Keeping track of the session variables (e.g., "We are talking about Sales Order 123").

- Agent Dispatch: Routing tasks to the appropriate specialized agents (e.g., triggering the Cash Collection Agent).
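The three responsibilities listed above can be illustrated with a small Python sketch. It does not represent Joule's actual runtime or API: `Orchestrator` is a hypothetical class, and keyword matching stands in for real intent recognition.

```python
class Orchestrator:
    """Toy orchestrator: recognizes an intent, keeps context, dispatches a handler."""

    def __init__(self):
        self.skills = {}   # intent name -> (keywords, handler)
        self.context = {}  # session variables shared with handlers

    def register(self, intent: str, keywords: set, handler) -> None:
        self.skills[intent] = (keywords, handler)

    def recognize(self, prompt: str):
        """Keyword overlap stands in for model-based intent recognition."""
        words = set(prompt.lower().split())
        for intent, (keywords, _) in self.skills.items():
            if words & keywords:
                return intent
        return None

    def handle(self, prompt: str) -> str:
        intent = self.recognize(prompt)
        if intent is None:
            return "no matching skill"
        _, handler = self.skills[intent]
        return handler(self.context)
```

A usage example: after `o = Orchestrator()`, setting `o.context["sales_order"] = "123"` and registering a handler under the keywords `{"invoice", "unpaid"}`, the prompt "Chase the unpaid balance" is routed to that handler together with the session context.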

6.2 Joule Studio and the Agent Builder

Joule Studio allows for the creation of custom agents using the Agent Builder.17 This low-code environment enables developers to define:

- Capabilities: What tools the agent can use (e.g., OData Service: API_PURCHASE_ORDER).

- Triggers: What events wake the agent up (e.g., Event Mesh: InvoiceCreated).

- Guardrails: What the agent is not allowed to do.

6.3 Connectivity: Model Context Protocol (MCP) and Headless Agents

A critical innovation is the Model Context Protocol (MCP).18 This protocol allows SAP agents to interact with external systems and tools in a standardized way.

- Use Case: An SAP agent needs to check a shipping rate from a logistics provider. Instead of a hard-coded interface, it uses an MCP server to query the provider dynamically.

- Headless vs. GUI Agents: The research distinguishes between two modes of agent operation:

- API-Based (Headless) Agents: These communicate via OData/REST APIs. They are fast, robust, and preferred for "Clean Core" environments.19

- GUI-Based Agents: For legacy ECC systems where APIs are missing, SAP GUI Advanced MCP Servers allow agents to drive the SAP GUI directly (scripting). This enables agents to perform "swivel chair" tasks on legacy screens, bridging the gap until migration is complete.20

7. Operationalizing the Agentic Enterprise: Use Cases

The synthesis of rigid structure and autonomous intelligence transforms key business functions.

7.1 Order-to-Cash (O2C): The Self-Driving Supply Chain

In O2C, agents are reported to reduce processing time by up to 70%.21

- Validation: Agents validate orders against contracts automatically.

- Stock Allocation: Instead of failing on a stock-out, an Inventory Agent performs a global Available-to-Promise (ATP) check across all plants, identifying potential transfers or substitutions, and proposing the optimal fulfillment path based on margin analysis.22

- Logistics: A Logistics Agent interacts with 3PL portals via MCP to schedule pickups, updating the SAP Delivery document with the tracking number and carrier details.
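The stock allocation step can be sketched as a simple selection over plants. This is a simplified illustration, not SAP's ATP logic: the plant records, cost fields, and the `best_fulfillment` function are assumptions, and partial or split fulfillment is ignored.

```python
def best_fulfillment(quantity: int, plants: list):
    """Pick the plant that can cover the full quantity at the highest unit margin."""
    candidates = [p for p in plants if p["available"] >= quantity]
    if not candidates:
        return None  # nothing can cover the order: escalate or propose substitution
    return max(
        candidates,
        key=lambda p: p["unit_price"] - p["unit_cost"] - p["transfer_cost"],
    )


# Invented plant data for illustration.
PLANTS = [
    {"plant": "DL01", "available": 0, "unit_price": 100, "unit_cost": 60, "transfer_cost": 0},
    {"plant": "HO01", "available": 50, "unit_price": 100, "unit_cost": 60, "transfer_cost": 5},
    {"plant": "AT01", "available": 80, "unit_price": 100, "unit_cost": 55, "transfer_cost": 15},
]
```

For an order of 40 units, the Dallas plant is out of stock, and the sketch picks `HO01` (margin 35 per unit) over `AT01` (margin 30 per unit).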

7.2 Finance: Dispute Resolution and Cash Collection

The Cash Collection Agent 11 proactively analyzes unpaid invoices.

- It detects a partial payment.

- It uses RAG to read the customer's email explanation ("Damaged goods").

- It correlates this with a Quality Notification in the system.

- Outcome: It autonomously proposes a credit memo for the damaged amount and clears the remaining balance, routing the proposal to a Finance Manager for one-click approval.

7.3 ESG and Sustainability: The Compliance Auditor Agent

Sustainability reporting (e.g., CSRD) is data-intensive and rigid.23 Sustainability Agents 24 act as auditors.

- They crawl the supply chain data in SAP.

- They chase suppliers for Scope 3 emissions certificates via email.

- They validate the certificates against the Sustainability Control Tower.25

- The "hard" validation ensures that the reported carbon numbers are traceable and auditable, preventing "greenwashing" liability.

7.4 Post-Merger Integration (M&A)

M&A integrations are notoriously difficult due to mismatched ERPs. PMI Agents 26 accelerate this.

- They "crawl" the legacy ERP and the target ERP.

- They identify semantic mappings (e.g., "Legacy Material Group 01 = SAP Material Group Z05").

- They automate the data migration and reconciliation, flagging anomalies for human review.

8. The Clean Core Imperative: Agents as Architects

The "Clean Core" strategy—keeping the ERP baseline free of custom modifications—is essential for Agentic AI.27 Custom "Z-code" is often opaque to standard agents.

However, agents are also the solution to this problem. ABAP AI Agents can assist in the migration.28

- Code Analysis: Agents scan millions of lines of legacy code.

- Refactoring: They identify non-compliant code (e.g., direct database updates) and rewrite it to use standard APIs or RAP (RESTful ABAP Programming) models.

- Documentation: They automatically generate documentation for undocumented legacy customizations.

Thus, the Agentic workforce helps build the "Clean Core" environment it requires to thrive.

9. Governance and the Future Workforce

The deployment of autonomous agents requires a new layer of governance.

9.1 Agent Mining

Just as Process Mining (e.g., SAP Signavio) is used to analyze human process adherence, Agent Mining 29 is used to monitor digital workers.

- Performance: Are agents getting stuck in loops?

- Compliance: Are agents attempting to access unauthorized data?

- Optimization: Agent Mining visualizes the "digital exhaust" of the agent interactions, allowing architects to fine-tune the prompts and tools.
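Two of the checks above, loop detection and unauthorized access attempts, can be sketched over a simple event log. The log format and function names are hypothetical; this is not the data model of any SAP or process mining product.

```python
from collections import Counter


def find_loops(events: list, min_repeats: int = 3) -> list:
    """Flag (agent, action, target) triples repeated at least min_repeats times."""
    counts = Counter((e["agent"], e["action"], e["target"]) for e in events)
    return sorted(key for key, n in counts.items() if n >= min_repeats)


def unauthorized_attempts(events: list) -> list:
    """Return every event the backend denied."""
    return [e for e in events if e.get("result") == "denied"]


# Invented log entries for illustration.
LOG = [
    {"agent": "sales", "action": "create_order", "target": "1000", "result": "error"},
    {"agent": "sales", "action": "create_order", "target": "1000", "result": "error"},
    {"agent": "sales", "action": "create_order", "target": "1000", "result": "error"},
    {"agent": "pricing", "action": "read", "target": "HR", "result": "denied"},
]
```

On this log, `find_loops(LOG)` flags the sales agent retrying the same order three times, and `unauthorized_attempts(LOG)` surfaces the pricing agent's denied read.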

9.2 The Shift to Supervision

The role of the human worker shifts from "Operator" to "Supervisor." The frustration of navigating "hard" SAP screens disappears, replaced by the natural language interface of Joule. The "hardness" remains, but it is pushed "under the hood," acting as the safety constraints for the agents. The human focuses on defining the goals and managing the exceptions escalated by the agents.

Conclusion: The Fortified Citadel

The reputation of SAP ERP systems as rigid, complex, and unforgiving is well-earned. For a human user, these traits are bugs. For an AI agent, they are features.

- The Granularity of RBAC provides the Security Containment needed for autonomous software.

- The Friction of exception handling provides the Orchestration Signals for agent collaboration.

- The Strict Validation provides the Hallucination Guardrails against generative error.

- The Structured Data provides the Grounding for deep reasoning.

Organizations that embrace this paradox—viewing the "Iron Cage" of SAP not as a prison for humans, but as a cradle for AI—will achieve levels of automation and agility that are impossible in less rigorous environments. They are building not "floating castles," but fortified, autonomous citadels of intelligence. The "hard to run" ERP is, in fact, the only ERP safe enough for the AI era.

End of Report.

Note: The insights presented are synthesized from the provided research materials, integrating technical specifications of SAP BTP, Joule, and industry analysis on Agentic AI trends.

References

1. Agentic AI in SAP Ecosystems - Smarter Enterprise Solutions, accessed January 19, 2026, https://adspyder.io/blog/agentic-ai-in-sap-ecosystems/

2. The Rise of Agentic AI ERP - Rimini Street, accessed January 19, 2026, https://www.riministreet.com/resources/whitepaper/the-rise-of-agentic-ai-erp/

3. How agentic AI is transforming IT: A CIO's guide - SAP, accessed January 19, 2026, https://www.sap.com/resources/how-agentic-ai-transforms-it-cio-guide

4. Does AI Confabulate or Hallucinate? - testRigor AI-Based Automated Testing Tool, accessed January 19, 2026, https://testrigor.com/blog/does-ai-confabulate-or-hallucinate/

5. AI agents: Thinking fast, thinking slow - SAP, accessed January 19, 2026, https://www.sap.com/blogs/balancing-autonomy-determinism-when-applying-agentic-ai

6. AI Agent RBAC: Essential Security Framework for Enterprise AI ..., accessed January 19, 2026, https://medium.com/@christopher_79834/ai-agent-rbac-essential-security-framework-for-enterprise-ai-deployment-d9d1d4711183

7. Authorization in the Age of AI Agents: Beyond All-or-Nothing Access Control, accessed January 19, 2026, https://nwosunneoma.medium.com/authorization-in-the-age-of-ai-agents-beyond-all-or-nothing-access-control-747d58adb8c1

8. Setup Principal Propagation for SAP BTP - Simplifier Community, accessed January 19, 2026, https://community.simplifier.io/doc/installation-instructions/setup-external-identity-provider/setup-principal-propagation-for-sap-btp/

9. PFCG BASED AGENT RULE SET UP Step by Step 1694177786 | PDF - Scribd, accessed January 19, 2026, https://www.scribd.com/document/863648565/PFCG-BASED-AGENT-RULE-SET-UP-step-by-step-1694177786

10. Best Practices and 5 Use Cases of SAP BTP Integration Suite - LeverX, accessed January 19, 2026, https://leverx.com/newsroom/sap-btp-integration-suite-use-cases

11. How SAP Uniquely Delivers AI Agents with Joule, accessed January 19, 2026, https://news.sap.com/2025/02/joule-sap-uniquely-delivers-ai-agents/

12. AI Agents Use Cases in the Enterprise | SAP, accessed January 19, 2026, https://www.sap.com/hk/resources/ai-agents-use-cases

13. How Agentic AI is Transforming Enterprise Platforms | BCG, accessed January 19, 2026, https://www.bcg.com/publications/2025/how-agentic-ai-is-transforming-enterprise-platforms

14. What Is a Knowledge Graph? - SAP, accessed January 19, 2026, https://www.sap.com/resources/knowledge-graph

15. Retrieval Augmented Generation (RAG) - SAP Architecture Center, accessed January 19, 2026, https://architecture.learning.sap.com/docs/ref-arch/e5eb3b9b1d/3

16. Joule, the AI Copilot for SAP - SAP Community, accessed January 19, 2026, https://pages.community.sap.com/topics/joule

17. Joule Studio Agent Builder Hits General Availability, Signaling Shift in Agentic AI, accessed January 19, 2026, https://sapinsider.org/blogs/joule-studio-agent-builder-hits-general-availability-signaling-shift-in-agentic-ai/

18. Agent builder in Joule Studio is now generally ava... - SAP Community, accessed January 19, 2026, https://community.sap.com/t5/artificial-intelligence-blogs-posts/agent-builder-in-joule-studio-is-now-generally-available-build-your-own/ba-p/14289282

19. API Agents vs. GUI Agents: Divergence and Convergence - arXiv, accessed January 19, 2026, https://arxiv.org/html/2503.11069v1

20. SAP GUI AI Agent: Architecture and Technical Details, accessed January 19, 2026, https://community.sap.com/t5/artificial-intelligence-blogs-posts/sap-gui-ai-agent-architecture-amp-technical-details/ba-p/14032043

21. Introducing Generative and Agentic AI into the O2C Process - SSON, accessed January 19, 2026, https://www.ssonetwork.com/finance-accounting/articles/generative-agentic-ai-order-to-cash

22. Order to Cash Automation with AI Agents | Beam AI, accessed January 19, 2026, https://beam.ai/use-cases/order-to-cash

23. AI-Driven ESG Reporting: How Agentic AI Can Cut Disclosure Prep from Weeks to Hours, accessed January 19, 2026, https://www.superteams.ai/blog/ai-driven-esg-reporting-how-agentic-ai-can-cut-disclosure-prep-from-weeks-to-hours

24. AI Agents in Sustainability Reporting: Powerful Wins | Digiqt Blog, accessed January 19, 2026, https://digiqt.com/blog/ai-agents-in-sustainability-reporting/

25. SAP Sustainability Control Tower, accessed January 19, 2026, https://www.sap.com/products/scm/sustainability-control-tower.html

26. Pharma IT Integration Playbook: Consolidating Veeva and SAP | IntuitionLabs, accessed January 19, 2026, https://intuitionlabs.ai/articles/pharma-it-integration-veeva-sap

27. SAP Clean Core Strategy For SAP Cloud ERP And Technical Debt ..., accessed January 19, 2026, https://www.redwood.com/article/sap-clean-core-strategy-cloud-erp/

28. SAP BTP, ABAP environment, Joule for developers, ABAP AI capabilities, accessed January 19, 2026, https://www.sap.com/products/technology-platform/btp-abap-environment-joule-for-developers-abap-ai-capabilities.html

29. Unleashing the full potential of AI agents with SAP Signavio- SAP ..., accessed January 19, 2026, https://www.signavio.com/post/unleashing-the-full-potential-of-ai-agents-with-sap-signavio/

The Keyboard as an Instrument: A Comprehensive Analysis of Vim’s Modal Paradigm, Evolution, and Future in the Age of Artificial Intelligence

1. Introduction: The Interface as an Extension of the Mind

The relationship between a human creator and their tool is defined by the transparency of the medium. For a musician, the instrument—whether a Stradivarius violin, a Fender Stratocaster, or a Steinway grand piano—ceases to be a separate object during the act of performance. It becomes an extension of the body, a conduit through which abstract musical ideas flow into physical reality without the friction of conscious mechanical thought. In the realm of text editing and software development, the keyboard occupies this same role. Yet, for the vast majority of computer users, the keyboard remains a typewriter: a static device for character-by-character insertion, a legacy of the mechanical era.

This report explores the thesis that Vim (Vi IMproved) and its predecessor Vi are not merely software applications for manipulating ASCII text, but represent a distinct, highly evolved philosophy of Human-Computer Interaction (HCI). This philosophy treats text editing not as a linear process of insertion, but as a structural, grammatical interaction with information. By converting the keyboard from a simple input device into a modal control surface—comparable to the distinct configurations of a musical instrument—Vim allows the user to transcend mechanical limitations.

The premise of this report is that Vim transforms the keyboard into "something like a musical instrument," specifically a guitar or a "supercharged piano." This metaphor is not merely poetic; it is structurally accurate. Just as a guitar requires the player to manipulate the fretboard (mode) before striking the string (action), Vim requires the user to manipulate the mode (Normal, Visual, Command) to define the interpretation of the keystroke. We will examine the historical evolution of this paradigm from the constraints of 300-baud teleprinters in the 1970s to the high-bandwidth cognitive demands of modern AI-assisted development. We will analyze the "grammar" of Vim (its verbs, nouns, and motions) as a linguistic system that enables "flow state," a psychological phenomenon of optimal experience described by Csikszentmihalyi.

Furthermore, we will trace the proliferation of the "Vi Way" into tools beyond text editors, such as browsers (Vimium), spreadsheets (VisiData), and file managers (Ranger). Finally, we will investigate the role of this keyboard-centric mastery in the emerging era of generative AI, arguing that the ability to manipulate text with virtuosity becomes more, not less, critical as AI shifts the developer's role from writer to editor. The "true coder" is not defined by the ability to type code, but by the ability to manipulate the logic of the machine with the speed of thought, a capability that Vim, and now AI-augmented editors that support Vim keybindings such as Cursor, is well placed to provide.

2. The Psychology of the Interface: Flow, Worship, and the Instrument

To understand why Vim is described as a "worship tool" where the mind translates ideas "frictionlessly," we must look beyond software engineering into cognitive psychology and the phenomenology of skill acquisition. The comparison to musical instruments highlights a fundamental divergence in interface design: the difference between "ease of use" (low barrier to entry) and "ease of expression" (high ceiling of mastery).

2.1 The Cognitive Mechanics of Flow State

Flow state, or optimal experience, is a mental state of high concentration and enjoyment characterized by complete absorption in an activity.1 In this state, the self-consciousness of the practitioner dissolves, and the action becomes autotelic—performed for its own sake. For musicians, this is the moment where the mechanics of playing (finger placement, breath control) disappear, leaving only the music. For programmers, this is the state where code flows from the mind to the screen without the interruption of interface friction.

Research indicates that Flow requires a balance between the challenge of a task and the skill of the person performing it.2 If the interface presents a barrier—such as the need to move a hand from the keyboard to the mouse to highlight a block of text—the micro-interruption breaks the feedback loop, potentially collapsing the Flow state. Vim aims to reduce this friction to zero. By keeping the hands on the home row and providing a language that matches the user's semantic intent (e.g., "delete this paragraph" becomes dap), Vim minimizes the cognitive load of translation between thought and action.
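The `dap` example follows an operator-plus-text-object pattern, which can be sketched as a tiny lookup-based parser. The three tables below cover only a small subset of Vim's real operators and text objects, and the function name `parse_command` is hypothetical, introduced purely for illustration.

```python
# A small subset of Vim's editing grammar: operator + scope + object.
OPERATORS = {"d": "delete", "c": "change", "y": "yank"}
SCOPES = {"a": "a", "i": "inner"}
OBJECTS = {"w": "word", "s": "sentence", "p": "paragraph"}


def parse_command(keys: str) -> tuple:
    """Translate a three-key command such as 'dap' into (verb, scope, noun)."""
    if len(keys) != 3:
        raise ValueError(f"expected exactly three keys, got {keys!r}")
    op, scope, obj = keys
    try:
        return OPERATORS[op], SCOPES[scope], OBJECTS[obj]
    except KeyError as exc:
        raise ValueError(f"unknown key {exc.args[0]!r} in {keys!r}") from None


if __name__ == "__main__":
    for command in ("dap", "ciw", "yis"):
        print(command, "->", " ".join(parse_command(command)))
```

Because the three tables combine freely, learning one new operator or one new text object extends every existing combination. This composability is what lets a short keystroke sequence track the user's intent so closely.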

| Feature of Flow State | Musical Performance Context | Vim Editing Context |
|---|---|---|
| Action-Awareness Merging | The musician is one with the instrument; fingers move subconsciously. | The coder is one with the editor; text manipulation occurs at "thought speed." |
| Clear Goals | The sheet music or improvisation structure provides immediate targets. | The editing task (e.g., "rename variable") is a clear, immediate goal. |
| Immediate Feedback | The sound is heard instantly; wrong notes are immediately obvious. | The text changes instantly; the modal cursor provides visual feedback of state. |
| Sense of Control | Mastery over the instrument allows for precise expression of nuance. | "God-mode" control over the text buffer; ability to manipulate massive structures instantly. |
| Loss of Self-Consciousness | The ego disappears; only the performance remains. | The interface disappears; only the logic and architecture remain. |

Table 1: Parallels between Musical Flow and Vim Flow based on Csikszentmihalyi’s criteria.1

2.2 The Keyboard as a Physical Medium

The "worship" of the keyboard reflects a reverence for the physical connection to the machine. In the Vim paradigm, the keyboard is not just a grid of buttons; it is a topography. Muscle memory plays a critical role here. Musicians rely on proprioception (the sense of the relative position of one's own body parts) to find notes without looking.4 Similarly, a Vim master relies on the proprioceptive certainty of the H, J, K, and L keys on the home row.

Studies on flow in musicians suggest that during optimal performance, only the muscles necessary for movement are engaged, while others relax.4 The standard computing interface, which requires frequent excursions to the mouse or trackpad, necessitates large, gross motor movements of the shoulder and arm. Vim, by contrast, restricts movement to the fine motor control of the fingers. This economy of motion reduces physical fatigue and keeps the user physically centered, reinforcing the "meditative" or "worship-like" quality of the interaction.

The comparison to a guitar is particularly apt regarding chordal input. While a piano offers a linear layout, a guitar requires the left hand to form a shape (the chord) while the right hand activates it (strumming). Vim's mode-switching key combinations often function like chords. Pressing Ctrl+V enters Visual Block mode; after the selection is extended over several lines, Shift+I begins an insert at the left edge of the block. The text is typed once on the first line and, when Esc is pressed, is replicated on every selected line. This is a harmonic action: a single input resonating across the vertical axis of the text.
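The effect of a visual-block insert can be modelled in a few lines. This is a simplified sketch and not Vim's implementation: the function name `block_insert` and its zero-based row and column parameters are assumptions for the example, and lines too short to reach the insert column are simply left untouched.

```python
def block_insert(lines, first_row, last_row, col, text):
    """Model Ctrl+V, select rows, Shift+I, type `text`, Esc.

    Inserts `text` at column `col` on every row from first_row to
    last_row inclusive (zero-based). Returns a new list; the input
    list is not modified.
    """
    result = list(lines)
    for row in range(first_row, last_row + 1):
        line = result[row]
        if len(line) < col:
            # Simplification: a line that does not reach the column is skipped.
            continue
        result[row] = line[:col] + text + line[col:]
    return result


if __name__ == "__main__":
    buffer = ["alpha = 1", "beta = 2", "gamma = 3"]
    # Comment out all three lines with one "chord".
    for line in block_insert(buffer, 0, 2, 0, "# "):
        print(line)
```

The user types the inserted text once, yet the loop applies it to every row in the block. This is the "single input, many lines" behaviour that the chord metaphor describes.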

3. The Archeology of Efficiency: From Ed to Vim