The Industrialization of Reality: Mosaic AI, the C2PA Failure, and the Nostr Solution

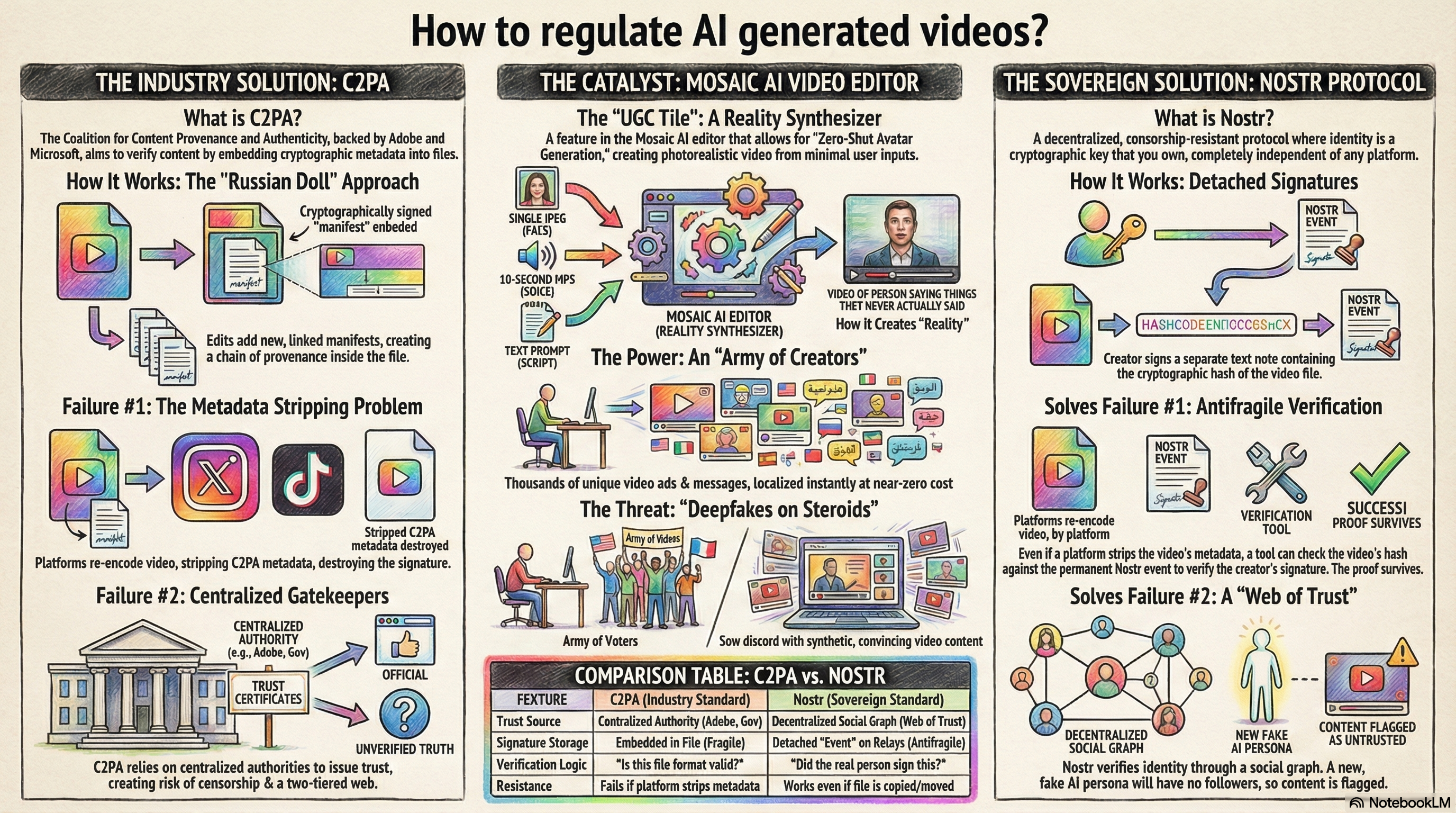

The digital media landscape has crossed a threshold of "Zero-Shot" creation. With tools like Mosaic AI, the capability to generate photorealistic, lip-synced video content of a human subject using only a single static image and a brief voice sample has become democratized. This is no longer the domain of Hollywood visual effects studios or state-level actors; it is a feature available to any marketer or creator via a "UGC Tile" in a browser-based editor.

This report analyzes three distinct aspects of this paradigm shift:

The Breakthrough: Acknowledging Mosaic AI not just as an editor, but as a "reality synthesizer" that offers infinite scalability for creators and brands.

The Threat: The implications of "deepfakes on steroids," where the line between authentic human expression and algorithmic manipulation is erased, posing unique risks to public discourse.

The Solution: A critical comparison of the industry-standard "Content Credentials" (C2PA) versus the decentralized Nostr protocol. This report argues that C2PA's centralized, metadata-dependent architecture is insufficient for the modern web, and that Nostr’s cryptographic "Web of Trust" offers the only viable path for verifying truth in an age of infinite synthetic media.

Chapter 1: The Breakthrough – Democratizing the "Human" Element

To understand the magnitude of Mosaic AI, we must move beyond the previous definition of "video editing." The founders, Adish Jain and Kyle Wade, have not simply built a faster way to cut clips; they have successfully decoupled human presence from human labor.

The digital media landscape has crossed a threshold of "Zero-Shot" creation. With tools like Mosaic AI, the capability to generate photorealistic, lip-synced video content of a human subject using only a single static image and a brief voice sample has become democratized. This is no longer the domain of Hollywood visual effects studios or state-level actors; it is a feature available to any marketer or creator via a "UGC Tile" in a browser-based editor.

This report analyzes three distinct aspects of this paradigm shift:

The Breakthrough: Acknowledging Mosaic AI not just as an editor, but as a "reality synthesizer" that offers infinite scalability for creators and brands.

The Threat: The implications of "deepfakes on steroids," where the line between authentic human expression and algorithmic manipulation is erased, posing unique risks to public discourse.

The Solution: A critical comparison of the industry-standard "Content Credentials" (C2PA) versus the decentralized Nostr protocol. This report argues that C2PA's centralized, metadata-dependent architecture is insufficient for the modern web, and that Nostr’s cryptographic "Web of Trust" offers the only viable path for verifying truth in an age of infinite synthetic media.

Chapter 1: The Breakthrough – Democratizing the "Human" Element

To understand the magnitude of Mosaic AI, we must move beyond the previous definition of "video editing." The founders, Adish Jain and Kyle Wade, have not simply built a faster way to cut clips; they have successfully decoupled human presence from human labor.

1.1 The "UGC Tile": A Technical Marvel

The user query correctly identifies the "UGC Tile" as the crown jewel of the Mosaic platform. Technically, this represents a massive leap in Zero-Shot Avatar Generation. Inputs: A single JPEG (the "Face") + A 10-second MP3 (the "Voice") + A Text Prompt (the "Script"). The Process: The AI agent analyzes the facial geometry from the 2D image, infers depth maps, and rigs a 3D mesh. It simultaneously clones the prosody and timbre of the voice sample. It then animates the mesh to synchronize lip movements (visemes) and facial expressions with the generated audio. The Output: A photorealistic video of a person saying things they never actually said, indistinguishable to the casual observer from a camera recording.

1.2 The "Army of Creators"

We must congratulate the founders on solving the "scalability of self." For a brand founder, this is a superpower. Infinite Scale: A founder can now record one voice sample and upload one photo. They can then generate 1,000 unique video ads, testing 1,000 different marketing angles, without ever turning on a camera again. Localization: The same static photo can be made to speak Spanish, Japanese, or Hindi fluently, breaking down language barriers instantly. Cost Reduction: The cost of video production collapses from thousands of dollars (cameras, lights, actors, time) to pennies (compute cost). This is a net positive for economic efficiency and creative iteration. Chapter 2: The Threat – Deepfakes on Steroids While the economic benefits are clear, the societal implications are profound. If a "real" video can be synthesized from a single photo found on LinkedIn, the concept of "video evidence" is effectively dead.

2.1 Beyond Advertising: The Manipulation of Opinion

If Mosaic can generate an "Army of Creators" to sell a toothbrush, a bad actor can generate an "Army of Voters" to sell a political narrative. Synthetic Consensus: An entity could generate 10,000 unique videos of "concerned citizens"—diverse ages, races, and backgrounds—all reading from a script designed to sow discord or push a specific policy. The "Flood" Strategy: Because the cost of generation is near-zero, the internet can be flooded with so much synthetic noise that finding the "signal" (authentic human speech) becomes impossible.

2.2 The Verification Crisis

In this environment, how do we know if a video of a CEO announcing a merger, or a politician declaring war, is real? Visual Forensics Fail: We can no longer rely on "looking closely." The AI models are improving faster than human perception. The Default Assumption: As the user noted, we must move to a world where "Everything is presumed AI-generated advertising unless proven otherwise." Chapter 3: The Current Industry Solution – C2PA (and Why It Fails) The technology industry's primary response to this crisis is the Coalition for Content Provenance and Authenticity (C2PA). Backed by Adobe, Microsoft, and Intel, it attempts to solve the problem using "Content Credentials." While well-intentioned, it suffers from fatal architectural flaws.

3.1 How C2PA Works

C2PA uses a "Russian Doll" approach to metadata. Embed: When a file is created (by a camera or AI tool), a "manifest" is embedded in the file header. Sign: This manifest is cryptographically signed using X.509 certificates (similar to SSL for websites). Chain: If the file is edited in Photoshop, a new manifest is added, linking back to the original.

3.2 Failure Mode 1: The "Metadata Stripping" Problem

C2PA relies on the File being the carrier of truth. The Reality: When you upload a video to X (Twitter), Instagram, or WhatsApp, the platform re-encodes the video to save bandwidth. This process strips out all metadata, including the C2PA signature. The Result: The video arrives on the viewer's screen as "Orphan Data." The signature is gone. The viewer has no way to verify it. C2PA proposes "cloud recovery" (checking a central database), but this relies on the file hash remaining identical, which re-encoding breaks.

3.3 Failure Mode 2: Centralized Gatekeepers (PKI)

C2PA relies on Public Key Infrastructure (PKI). To be "trusted," you must have a certificate issued by a "Certificate Authority" (CA) like Adobe or a government body. Censorship Risk: Who decides who gets a "Verified Human" certificate? A government could revoke the certificates of dissident journalists, rendering their real videos "unverified." The "Blue Check" Problem: It creates a two-tier web: "Institutional Truth" (CNN, BBC, Adobe) vs. "Unverified Masses." It does not empower the individual creator. Chapter 4: The Sovereign Fix – The Nostr Protocol To truly solve the "Mosaic Problem"—where anyone can fake anyone—we need a solution that is resistant to platform stripping and independent of central authorities. This is where Nostr (Notes and Other Stuff Transmitted by Relays) succeeds.

4.1 Detached Signatures: The "Event" is the Truth

Nostr separates the Signature from the File. The Workflow: Instead of embedding the signature inside the video file (where it gets deleted), the creator signs a Nostr Event (a text note). NIP-94 (File Integrity): This event contains the SHA-256 hash of the video file. The Link: The creator posts this event to the network. "I, [User Public Key], attest that I created the video with Hash [X]." Resilience: Even if Instagram strips the metadata from the video file, the Nostr Event remains on the relays, immutable and signed. A browser plugin or client can calculate the hash of the video you are watching and check if a valid Nostr event exists for it.

4.2 Web of Trust (WoT): Solving the "Fake Persona" Problem

Mosaic can generate 1,000 fake faces. C2PA might verify that "Camera X created this," but it can't tell you if the person is a trusted entity. Nostr uses a Social Graph trust model. The Problem: A bad actor uses Mosaic to generate a fake "Adish Jain" video. They sign it with a new Nostr key. The Defense: My Nostr client asks: "Does anyone I follow trust this new key?" Answer: No. This key has 0 followers in my network. Result: The video is flagged as "Untrusted / Possible AI Slop." The Reality: The real Adish Jain signs his videos with his established Private Key, which is followed by thousands of real people. Even if the AI clone looks perfect, the Key Signature will fail the social verification.

4.3 Summary of the Fix

Feature C2PA (Industry Standard) Nostr (Sovereign Standard) Trust Source Centralized Authority (Adobe, Gov) Decentralized Social Graph (Web of Trust) Signature Storage Embedded in File (Fragile) Detached "Event" on Relays (Antifragile) Verification Logic "Is this file format valid?" "Did the real person sign this?" Resistance Fails if platform strips metadata Works even if file is copied/moved

Conclusion

The "UGC Tile" in Mosaic AI is a triumph of engineering that marks the end of the "Seeing is Believing" era. We have entered a time where reality is a programmable asset. We cannot stop this technology, nor should we demonize the founders for building it. However, we must adapt our "immune system" for truth. The current industry solution, C2PA, is a fragile patch on a broken model. Nostr is the necessary evolution. By shifting trust from the File (which can be faked) to the Cryptographic Identity (which cannot), we build a web where "thousands of AI personas" are visible for what they are: noise. Only the signed signal remains.

References

- Mosaic AI: edit.mosaic.so

- NIP-94 Specification: File Metadata & Integrity

- C2PA: Coalition for Content Provenance and Authenticity