The Taxonomy of Intent: Applying Prompt Engineering 2.0 Frameworks to Highly Stylized Narrative Generation

Abstract

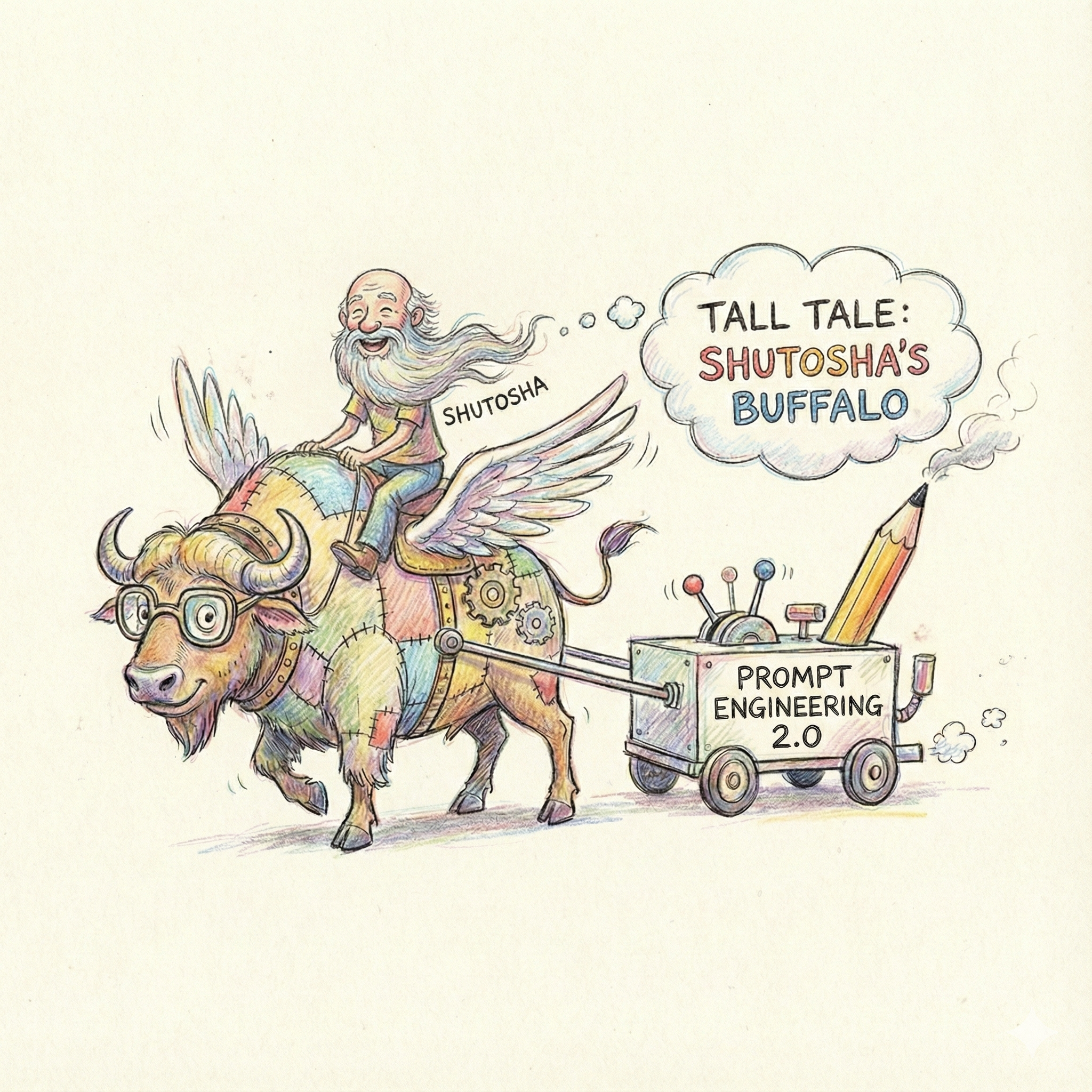

The disciplined practice of Prompt Engineering 2.0 (PE 2.0) is necessary to mitigate the pervasive issue of "AI Slop"—low-quality, repetitive synthetic media—by transforming user input from vague description to structured protocol. This paper examines three core PE 2.0 frameworks—Role-Task-Format (RTF), CREATE, and CO-STAR—and demonstrates their application in generating highly specific, nuanced content. Using the narrative of “Shutosha’s Buffalo,” a colloquial, hyperbole-driven "Maha-Shootri" (tall tale), this analysis illustrates how structured prompting ensures fidelity to tone, humor, and linguistic complexity, yielding high-quality, non-straightforward outputs.

1. Introduction: The Crisis of Algorithmic Entropy

The reliance on unstructured, conversational "Descriptive Prompting" (termed Prompt Engineering 1.0) often results in outputs that default to the probabilistic average of the internet, leading to content described as "banal, repetitive, and devoid of specific intent"—or "slop". PE 2.0 addresses this by treating the prompt as a Dynamic Protocol, a set of instructions that programs the model’s latent space rather than merely asking a question. This approach leverages structured interaction frameworks to constrain the model’s search space, forcing it to produce high fidelity and utility results. The underlying theory is that the user must provide the "syntax tree" for the task, much like parsing the famous "Buffalo" sentence, ensuring the AI can differentiate the user’s intent from linguistic noise.

The challenge of recreating a nuanced piece of creative content, such as the "Maha-Shootri" of “Shutosha’s Buffalo”, serves as an ideal case study. This tale requires adherence to an exaggerated, comedic style, specific character roles, and a particular cultural register (South Asian humor).

2. Framework Applications for "Shutosha's Buffalo"

To ensure the AI produces the story with the requisite tone, humor, and precise structure, a combination of PE 2.0 frameworks must be employed. These frameworks operate at the Prompt/Context and Cognition layers of the Agentic Alignment Stack.

2.1. Role-Task-Format (RTF): Enforcing Structural Integrity

The RTF structure is the "workhorse" of PE 2.0, providing focused and professional results by defining the AI’s identity, required action, and output structure. By explicitly defining the format, RTF prevents "structural slop," where the right information is delivered in the wrong shape.

Application to "Shutosha's Buffalo" Narrative:

| RTF Component | Specific Instruction for Maha-Shootri | PE 2.0 Rationale |

|---|---|---|

| Role (R) | Act as a master creative storyteller and scriptwriter, specializing in highly exaggerated, dramatic, and colloquial Urdu/Hindustani prose. | Role Priming reliably lifts output quality by setting the tone and knowledge base. |

| Task (T) | Retell the complete story contained in the source, preserving all key plot points and the sequence of events exactly as written. | Uses action-oriented language to guide the AI, crucial for avoiding vague results. |

| Format (F) | Output must be delivered entirely in Urdu, maintaining the dramatic, bold headings (like दंगल शुरू), and using emojis where appropriate. | Explicit format cues reduce hallucination and ensure immediate usability in downstream applications. |

2.2. CREATE: Cultivating Constrained Creativity and Tone

The CREATE framework (Character, Request, Examples, Adjustments, Type, Extras) is highly effective for creative tasks, specifically because defining the Character activates relevant vocabulary sets in the LLM, preventing the "blandness" of standard AI text.

Application to "Shutosha's Buffalo" Narrative:

| CREATE Component | Specific Instruction for Maha-Shootri | PE 2.0 Rationale |

|---|---|---|

| Character (C) | Defined as a storyteller of comedic folk legends, specializing in the "महा-शुट्री" style. | Ensures the style aligns with the intended dramatic and humorous genre. |

| Adjustment (A) | Maintain the exaggerated, over-the-top, and highly comedic tone. Ensure the buffalo's dialogue is included and delivered in its "deep, philosophical voice". | Negative constraints and specific stylistic mandates tighten the boundary of the required output. |

| Examples (E) (Implicit in the story itself) | The source text provided serves as a few-shot example of the extreme hyperbole desired (e.g., the Earth criticizing the road quality; the village plunging into darkness). | Examples are the most powerful steering mechanism for aligning the model’s internal weights to the desired style. |

2.3. CO-STAR: Contextualizing Cultural Nuance

The CO-STAR framework (Context, Objective, Style, Tone, Audience, Response) is the gold standard for complex, high-stakes tasks, specifically designed to address hallucination and irrelevance by emphasizing heavy context.

Application to "Shutosha's Buffalo" Narrative:

| CO-STAR Component | Specific Instruction for Maha-Shootri | PE 2.0 Rationale |

|---|---|---|

| Context (C) | The underlying material is a "Maha-Shootri," a tall tale characterized by hyperbole and South Asian humor. | Grounding the model in the specific genre prevents the model from generating a generic Western-style joke. |

| Objective (O) | Retell the narrative in Urdu/Hindustani prose while maintaining fidelity to the original punchlines (e.g., the "Cow-lipse"). | Ensures the model focuses on the required goal, not tangential elaborations. |

| Style (S) & Tone (T) | Style must be "महा-शुट्री"; Tone must be exaggerated, dramatic, and colloquial. | Constraining Style and Tone reduces the entropy of word choice and prevents "synthetic filler" typical of default AI responses. |

2.4. Chain-of-Thought (CoT): Ensuring Coherence

While often associated with analytical tasks, Chain-of-Thought prompting, which breaks down complex tasks into step-by-step processes, is crucial for maintaining narrative fidelity. By requiring the AI to adhere to the exact sequence of events in the source, CoT principles prevent Structural Incoherence—narratives that dissolve into randomness—by acting as a functional checklist. The instruction to deliver the output by sequentially rewriting each section ensures the logical flow (stroll meet buffalo chase philosophical question blackout) is preserved.

3. Conclusion

Prompt Engineering 2.0 frameworks—RTF, CREATE, CO-STAR, and CoT—are essential tools for moving beyond generic content and achieving high-fidelity, goal-oriented outputs. By layering instructions for Role, Tone, and Format, the AI is programmed to produce a unique artifact, rather than "slop". The successful reproduction of the exaggerated style and cultural specificity of “Shutosha’s Buffalo” demonstrates the power of constructing replicable and disciplined cognitive workflows.

Example Output: The Tall Tale Retained

The following is the structured English translation of the story (the original was generated in Urdu using the structured prompt), demonstrating the fidelity to tone, humor, and format specified by the PE 2.0 frameworks.

🐃🌪️ Shutosha and the Amazing Buffalo—A Mega-Epic (Maha-Shootri)

So this is what happened: one morning Shutosha decided,

“Today, I’ll just go out for a stroll.”

But Fate, that day, was hungry—it dropped the entire tandoor (oven) of the story right in their path.

On the road, his eyes fell upon a buffalo.

And not just any ordinary buffalo—

She was so heavy that the Earth said, “Sister, walk slowly… I’m a government road; I haven't cracked yet!”

Shutosha thought,

“Come on, let’s pet her a little.”

But the buffalo showed such a mood swing

that even the folks at NASA said, “This is beyond our rocket science.”

The buffalo took a deep breath—

So sharp that the nearby tree shook and declared,

“Brother, I’m already cleaned up before autumn even starts!”

🌩️ The Great Showdown Begins

Suddenly, the buffalo turned around and gave a long look,

as if she was saying:

“Shutosha… let's have your cardio class today.”

And zoom!

She fled straight toward the West.

So fast that the pole nearby said,

“I am government property… but even I don't get this much downtime!”

Shutosha didn't back down either—

He ran after her!

His slipper picked up such speed that the wind screamed from behind:

“Bro, think of the speed limit! I'll get a ticket!”

🔥 A Moment in History

The buffalo suddenly hit the brakes and stopped—

So abruptly that a mouse commented:

“If I got that braking skill, I'd compete in Formula 1!”

Shutosha went closer and asked:

“Why, you? What’s the issue?”

The buffalo said in a deep, philosophical voice—

(Yes, in this epic, the buffalo talks—and fluently at that.)

“Shutosha brother, the sun is very strong today.

I thought you could become a tree and give me some shade.”

Shutosha was so astonished

that the Earth chuckled and said,

“This is going to be in the books, man!”

🌙 And then came the moment that plunged history into darkness

Shutosha said, “Just move aside a bit.”

But the buffalo was so massive that

just by shaking her head—

The entire village plunged into darkness!

The villagers yelled:

“Oh! Solar eclipse! Solar eclipse!”

The Pandit (priest) climbed onto the roof and announced:

“Not an eclipse! This is a Buffalo Eclipse—the Cow-lipse!”

🌟 In the End…

The friendship between Shutosha and the buffalo became a legend.

People still say today—

“When the sun sets, night falls…

but if the buffalo shifts—

the entire district suffers a blackout!”